Rule Deck Comparison Doesn’t Have to Be Difficult

By Saunder Peng

Comparing results from different rule decks can be frustrating. Learn how you can use a chip finishing tool and scripts to automate the process and ensure accurate results.

Foundry rule decks change all the time, as foundries uncover new manufacturing issues, the process changes, or design criteria are tightened to improve runtime or stability. Sometimes a new version of a user’s design rule checking (DRC) tool is released, and the results from the DRC run differ from those of the previous version. Or perhaps a company wants to compare results between rule decks from different foundries. Whatever the cause, designers must be able to accurately identify and understand the differences to determine if and how their designs are affected. They can then decide whether to implement any new requirements to maintain DRC-compliant tapeouts, or to waive the new errors if they do not affect the manufacturability of the design.

Of course, that’s easier said than done. Rule decks are getting larger and more complex every day, so making simple visual comparisons between versions is nearly impossible. In fact, sometimes the changes are buried inside the rule deck code, which isn’t even accessible to users. In many cases, the rule deck changes won’t have any effect on customer designs, but users must still analyze and understand the changes before they can make that decision.

Typically, users apply a new rule deck to their design, or implement the new version of the DRC tool, then examine the results database for differences (new DRC violations). The challenges are 1) to compare two different results databases and identify true differences in the results, and 2) to understand if these differences result from the changes in the rule deck or tool version, or were simply caused by human error or misapplication. So, how can users accurately compare two sets of results and determine that any differences in results are true DRC errors that require layout changes?

Let’s walk through a typical comparison process, using Calibre tools, since that’s what I work with every day. I’ll explain what not to do, then show you a process that can help you perform these comparisons with significantly less impact on your time and resources.

When designers run DRC, results are normally output to an ASCII file, which enables quick viewing of the DRC results in a results database viewer, such as Calibre RVE™. With Calibre RVE, a user can simply double-click on any result line to view additional detail that can help in debugging the error.

Running a design with both decks, then comparing results seems logical, but in practice, it doesn’t work. DRC tools like Calibre DRC don’t run in the exact same sequence each time, so there is no guarantee that results will appear in the same order. In addition, Calibre DRC reports hierarchically—that is, it reports one result for all cells with the same design—but it doesn’t guarantee which cell it will choose to report on from one run to the next. This variation between runs makes direct comparisons of two results databases virtually impossible.
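To make the ordering problem concrete, here is a minimal Python sketch. The record layout (check name, cell name, bounding box) and the check names are hypothetical stand-ins, not the actual Calibre results database format. It shows that a position-by-position comparison flags every record when the same results arrive in a different order, while an order-independent comparison does not.

```python
# Hypothetical records: (check_name, cell_name, box as (x1, y1, x2, y2)).
# This is an illustrative layout, not the real ASCII results database format.
run_a = [
    ("M1.S.1", "TOP", (0, 0, 10, 2)),
    ("M1.W.1", "TOP", (5, 5, 6, 9)),
    ("V1.EN.1", "TOP", (20, 20, 21, 21)),
]

# The second run contains the same results, reported in a different order.
run_b = [run_a[2], run_a[0], run_a[1]]

# Naive comparison: walk both lists in step and count records that differ.
positional_mismatches = sum(1 for a, b in zip(run_a, run_b) if a != b)

# Order-independent comparison: treat each run as a set of records.
order_free_equal = set(run_a) == set(run_b)

print("positional mismatches:", positional_mismatches)
print("same results ignoring order:", order_free_equal)
```

Ignoring order only addresses the first problem. It does not help when the two runs report the same hierarchical result against different cells, which is why the results also need to be flattened before they are compared.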

Of course, designers can use a single command at the top of the DRC run file to select a Graphical Database System (GDS) or Open Artwork System Interchange Standard (OASIS) format in place of the ASCII format for output, but if they use this option, all results are output into a single layer. Adding commands that tell the tool which results go in which layer requires modifying the foundry rule deck, which designers usually avoid, because it is the single authorized and verified set of requirements for DRC compliance. In addition, once you modify a rule deck, you may create additional, unanticipated changes in behavior. It becomes very difficult to determine which changes resulted from true differences between the decks, and which were introduced by the modifications made in the deck.

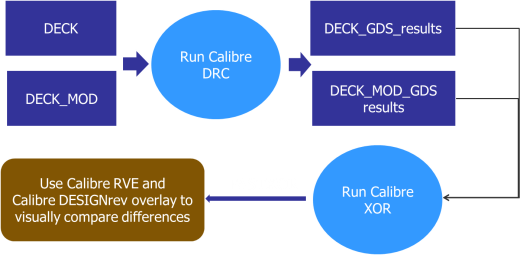

Figure 1. Users can output DRC results directly to a GDS or OASIS format, but that requires changing the foundry rule deck.

A better option is to complete the DRC runs as usual, then use a chip finishing tool like Calibre DESIGNrev instead to convert the two ASCII error results files into a GDS or OASIS format. The ASCII results database contains the rule check names, and the locations or regions of the errors. When Calibre DESIGNrev loads the results database, the check name becomes the layer name, and the region becomes the polygon on the layer.
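The check-name-to-layer idea can be sketched in a few lines of Python. This is only an illustration of the data mapping, not how Calibre DESIGNrev implements it: the record layout, the helper names `results_to_layers` and `assign_layer_numbers`, and the starting layer number 1000 are all assumptions made for the example.

```python
from collections import defaultdict

def results_to_layers(results):
    """Group error regions by check name, so each check becomes one 'layer'."""
    layers = defaultdict(list)
    for check_name, region in results:
        layers[check_name].append(region)
    return dict(layers)

def assign_layer_numbers(*layer_dicts, first_layer=1000):
    """Give every check name seen in any run the same layer number.

    Sorting the names makes the mapping independent of the order in which
    the checks were reported. The starting number is an arbitrary assumption.
    """
    names = sorted(set().union(*layer_dicts))
    return {name: first_layer + i for i, name in enumerate(names)}

# Hypothetical results: (check_name, box as (x1, y1, x2, y2)).
run_a = [
    ("M1.S.1", (0, 0, 10, 2)),
    ("M1.W.1", (5, 5, 6, 9)),
    ("M1.S.1", (30, 0, 40, 2)),
]
run_b = [
    ("V1.EN.1", (20, 20, 21, 21)),
    ("M1.S.1", (0, 0, 10, 2)),
]

layers_a = results_to_layers(run_a)
layers_b = results_to_layers(run_b)
layer_map = assign_layer_numbers(layers_a, layers_b)
print(layer_map)
```

Building one shared map from both runs means a given check lands on the same layer in both output files, so the later comparison can proceed layer by layer.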

Calibre DESIGNrev can help users perform the layer mapping to match up results layer by layer, even if the order is different. It takes the results from hierarchical ASCII to flat GDS/OASIS in the following order:

- It converts the results database from ASCII to GDS/OASIS.

- It maps the results to layers.

- It converts the hierarchical results to flat results.

The user can then feed each file into Calibre DESIGNrev again, along with the original design GDS/OASIS database (which contains cell locations) to obtain two “flat” files showing the cells in all locations. Now the user can run a comparison function to find the differences for further analysis.
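The flatten-then-compare step can be illustrated with a short Python sketch. It is a simplified model under stated assumptions, not the Calibre flow itself: the placement table, cell names, and check names are hypothetical, placements are translations only (no rotation or mirroring), and regions are compared as exact boxes rather than by a true geometric XOR.

```python
from collections import defaultdict

# Hypothetical placements: cell name -> (dx, dy) offsets of each instance
# in top-level coordinates. In a real flow this comes from the design database.
PLACEMENTS = {
    "TOP": [(0, 0)],
    "INV": [(100, 0), (200, 0)],
}

def flatten(results, placements):
    """Return {check_name: set of boxes in top-level coordinates}."""
    flat = defaultdict(set)
    for check, cell, (x1, y1, x2, y2) in results:
        # Repeat the result at every location where its cell is placed.
        for dx, dy in placements[cell]:
            flat[check].add((x1 + dx, y1 + dy, x2 + dx, y2 + dy))
    return dict(flat)

def diff(flat_a, flat_b):
    """Return {check_name: (only_in_a, only_in_b)} for checks that differ."""
    out = {}
    for check in sorted(set(flat_a) | set(flat_b)):
        a = flat_a.get(check, set())
        b = flat_b.get(check, set())
        if a != b:
            out[check] = (a - b, b - a)
    return out

# The old run reports the spacing error once, inside cell INV.
old_run = [("M1.S.1", "INV", (1, 1, 3, 2))]

# The new run reports the same error at the top level for each instance,
# plus one genuinely new width violation.
new_run = [
    ("M1.S.1", "TOP", (101, 1, 103, 2)),
    ("M1.S.1", "TOP", (201, 1, 203, 2)),
    ("M2.W.1", "TOP", (50, 50, 52, 60)),
]

differences = diff(flatten(old_run, PLACEMENTS), flatten(new_run, PLACEMENTS))
print(differences)
```

Once both runs are expressed in top-level coordinates, the spacing error that was reported against different cells cancels out, and only the new width violation remains for further analysis.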

Even better, designers can use scripts to perform the entire conversion and comparison without user intervention, as part of the DRC run. Using scripts ensures the process is performed identically for each run, and that no step is missed that might corrupt the results. In addition, these scripts can be saved and used for any future comparisons.

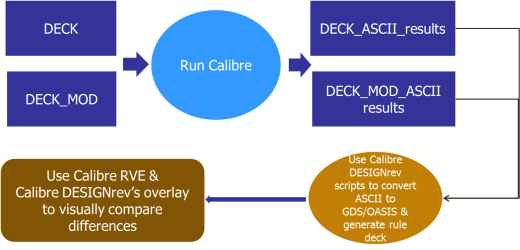

Figure 2. Using Calibre DESIGNrev to convert the ASCII results databases after the DRC run is not only much faster, but also requires no rule deck modification.

After performing the comparison, users can use Calibre RVE to compare areas in two different result databases by overlaying the designs and toggling between them to see the differences in error results. Designers can also use the Calibre dbdiff function to generate a “rule deck” with a map option to show which rule checks match the dbdiff results.

Running an entire rule deck takes a long time, and modifying a foundry rule deck is generally not good design practice. The process of converting ASCII to GDS/OASIS, merging with the original design GDS, and running a dbdiff is very fast (often just minutes). When you can get the same results faster, and without any deck modification, doesn’t it make sense to use that approach?

Author

Saunder Peng is a technical marketing engineer in Mentor Graphics’ Design to Silicon Division.

This article was originally published on www.semiengineering.com