Mixed Reality needs Mixed Signal

Empowering the Design and Verification of Mixed-Signal SoCs for Advanced Spatial Computing with Symphony Pro

We are on the cusp of a new era with the emergence of spatial computing, enabling machines to perceive and interact with the physical environment in a remarkably intuitive and natural manner. Mixed reality is the term used to describe the combination of augmented reality (AR) and virtual reality (VR) in a single device. AR overlays digital information on top of the real world, while VR immerses the user in a simulated environment. Both technologies require high-performance sensors to capture and understand the real world, as well as to track the user’s position, orientation, eye gaze, and hand gestures. These sensors rely on analog and mixed-signal circuits to convert physical signals into digital data that can be processed by the device.

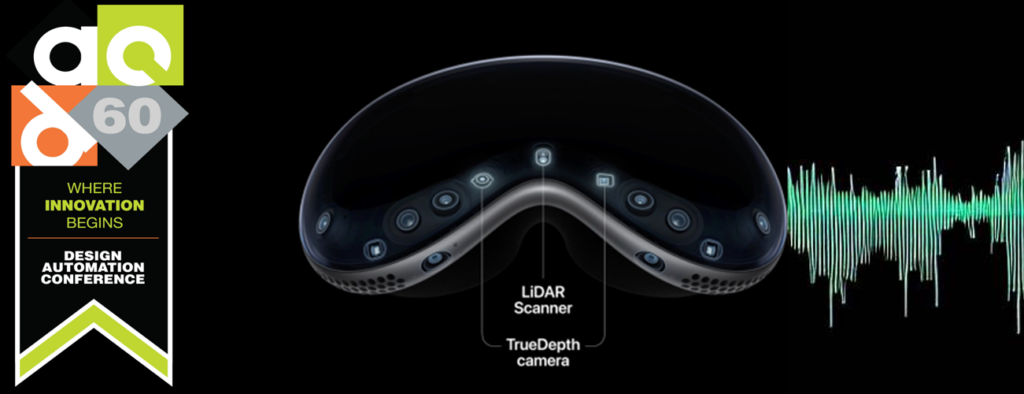

One of the most anticipated mixed reality devices is Apple’s Vision Pro, which was unveiled at WWDC 2023 and is expected to launch in early 2024. The Vision Pro is a headset that looks like a pair of ski goggles, with a tinted front panel that acts as a lens and a display. The headset is powered by two chips: the Apple M2, which handles general computing tasks, and the R1, which handles spatial computing tasks.

The Vision Pro has a total of 23 sensing inputs: 12 cameras, five other sensors (including a lidar scanner), and six microphones [1]. These sensors enable the device to create a 3D map of the environment, track the user’s head, eyes, and hands, and provide spatial audio. The sensors also enable a unique feature called EyeSight, which shows a view of the wearer’s eyes on the external display, making the headset appear transparent [2].

The sensors in Vision Pro use analog and mixed-signal circuits to convert light, sound, and motion into electrical signals that can be digitized and processed by the R1 chip. For example, the cameras use image sensors that have an array of pixels, each with a photodiode that converts light into current and an amplifier that converts current into voltage. The voltage is then converted into digital bits by an analog-to-digital converter (ADC). Similarly, a lidar sensor uses laser diodes that emit pulses of light and photodetectors that measure the time it takes for the light to bounce back from an object. The time-of-flight measurement is then converted into digital bits for processing.
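As a rough illustration of the two conversions described above, the sketch below models an ideal ADC and a time-of-flight distance calculation. It is a textbook idealization, not a description of the Vision Pro's actual circuits: the function names, the 1.0 V reference, and the 10-bit resolution are all assumptions chosen for the example.

```python
# Idealized models only; names and parameter values are hypothetical.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def adc_code(voltage: float, v_ref: float, bits: int) -> int:
    """Ideal unipolar ADC: map 0..v_ref onto integer codes 0..2**bits - 1."""
    levels = 2 ** bits
    code = int(voltage / v_ref * levels)
    # Clamp inputs that fall outside the converter's range.
    return max(0, min(levels - 1, code))


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target from the round-trip time: d = c * t / 2."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    # A pixel voltage at half of an assumed 1.0 V reference, 10-bit ADC.
    print(adc_code(0.5, v_ref=1.0, bits=10))
    # A light pulse that returns after 10 ns.
    print(round(tof_distance_m(10e-9), 3), "m")
```

The factor of two in the distance formula accounts for the pulse travelling to the object and back. It also shows why timing precision matters: one nanosecond of timing error corresponds to roughly 15 cm of depth error.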

Analog and mixed-signal circuits are essential for mixed reality devices because they bridge the gap between the physical world and the digital world. They enable the device to sense and interact with the environment, as well as to provide feedback to the user. Without them, mixed reality would not be possible.

However, designing analog and mixed-signal circuits for mixed-reality devices is not trivial. The circuits must address several challenges:

High performance: The sensors must capture high-resolution images, accurate depth measurements, and precise motion tracking at high frame rates and low latency. This requires fast and efficient analog and mixed-signal circuits that can handle large amounts of data with minimal noise and distortion.

Low power: The sensors must consume minimal power to extend the battery life of the device. This requires analog and mixed-signal circuits that can operate at low voltages and currents, as well as use techniques such as power gating, clock gating, and dynamic voltage scaling to reduce power consumption.

Small size: The sensors must fit within the limited space of the device. This requires analog and mixed-signal circuits that can be integrated into a single chip or module, as well as use techniques such as scaling, stacking, and packaging to reduce size.
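To make the low-power point above more concrete, the following sketch uses the standard first-order model for switching power in digital CMOS logic, P = a * C * V^2 * f. All numbers are illustrative assumptions and do not come from any real device. The model covers only dynamic power, so it shows the effect of voltage scaling and clock gating but not power gating, which mainly targets leakage.

```python
# First-order dynamic power model; all values below are hypothetical.

def dynamic_power_w(activity: float, capacitance_f: float,
                    vdd_v: float, freq_hz: float) -> float:
    """Switching power of CMOS logic: P = a * C * V^2 * f."""
    return activity * capacitance_f * vdd_v ** 2 * freq_hz


if __name__ == "__main__":
    baseline = dynamic_power_w(0.2, 1e-9, 1.0, 1e9)

    # Dynamic voltage scaling: lower the supply from 1.0 V to 0.8 V.
    scaled = dynamic_power_w(0.2, 1e-9, 0.8, 1e9)

    # Clock gating: idle blocks stop toggling, modelled here as
    # halving the activity factor.
    gated = dynamic_power_w(0.1, 1e-9, 1.0, 1e9)

    print(f"voltage scaling keeps {scaled / baseline:.0%} of baseline power")
    print(f"clock gating keeps {gated / baseline:.0%} of baseline power")
```

Because power depends on the square of the supply voltage, a 20% reduction in voltage cuts switching power by about 36% in this model, which is why voltage scaling is such a common lever in battery-powered designs.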

Mixed reality is an exciting technology that promises to revolutionize domains such as entertainment, education, health care, and industry. However, taking a mixed-signal SoC from a conceptual idea to high-yielding silicon that meets stringent performance and power specifications requires robust verification methodologies and tools. To address this need, Siemens EDA has developed Symphony Pro, an innovative mixed-signal verification platform. By leveraging Symphony Pro’s capabilities, designers can verify their mixed-signal SoCs efficiently and reliably, helping to ensure the successful realization of these advanced spatial computing systems.

Join me at the Siemens EDA DAC 2023 session “Empowering the Design and Verification of Mixed-Signal SoCs for Advanced Spatial Computing with Symphony Pro” at Booth 2521 on July 10th at 4:30 PM.

[1] https://techcrunch.com/2023/06/05/apple-vision-pro-everything-you-need-to-know/

[2] https://mixed-news.com/en/apple-vision-pro-eyesight-explanation/