OS influence on power consumption

Power consumption is an issue. With portable devices this affects battery life. [I am irritated by the short intervals between necessary charging sessions with my phone. On the other hand, my netbook can run for over 7 hours on a charge, which is great. Likewise, my newly-acquired iPad seems to perform well.] With mains powered equipment, power consumption is also a concern for environmental reasons.

The matter of power has always been seen as a “hardware issue”, but, of late, there has been an increasing interest in the role of software …

It is clear that software can take an active role in managing power consumption, if the right capabilities are provided. For example, parts of a system may be switched on and off, as required. The clock speed of a processor may be adjusted according to the amount of work it needs to do at a particular moment. The clock frequency can have a very significant effect upon power consumption.
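To put a rough number on the effect of clock frequency, here is a minimal sketch using the usual first-order approximation for CMOS dynamic power, P = C·V²·f. The capacitance, voltages and frequencies are made-up illustrative values, not figures from any real device, and the model ignores static (leakage) power.

```python
def dynamic_power(c_eff, voltage, freq_hz):
    """First-order CMOS dynamic power in watts: P = C * V^2 * f."""
    return c_eff * voltage ** 2 * freq_hz


# Hypothetical operating points (assumed values, not measurements).
C_EFF = 1e-9  # effective switched capacitance in farads (assumed)

full_speed = dynamic_power(C_EFF, voltage=1.2, freq_hz=400e6)
half_speed = dynamic_power(C_EFF, voltage=1.0, freq_hz=200e6)

# Assume the same job takes 1 s at full speed and 2 s at half speed.
energy_full = full_speed * 1.0  # joules
energy_half = half_speed * 2.0  # joules

print(f"full speed: {full_speed:.3f} W, {energy_full:.3f} J per job")
print(f"half speed: {half_speed:.3f} W, {energy_half:.3f} J per job")
```

In this simple model, halving the frequency alone halves the power but doubles the run time, so the energy per job is unchanged. The saving comes from the lower supply voltage that a slower clock usually permits.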

Another, more simplistic argument is based upon selection of operating system. A traditional RTOS [like Nucleus] is likely to have a significantly smaller memory footprint than Linux, so less memory needs to be fitted. As memory consumes power, less memory means less power consumption. This argument is sound, but may be countered by the assertion that memory power consumption is really not too bad.

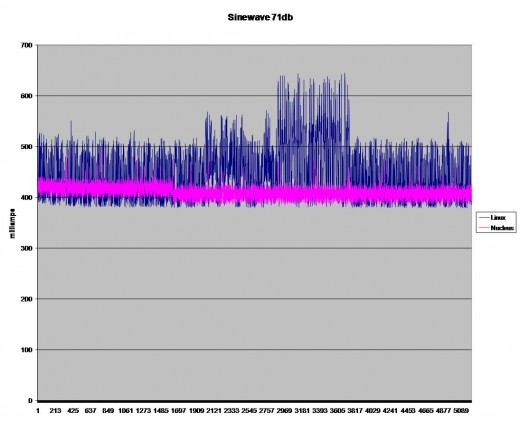

Last week, I was working with my colleague Stephen Olsen, who has been doing some research into these matters. He showed me that there is an even simpler perspective: the amount of power the CPU consumes, when doing a particular job, will vary depending upon the OS. His experiment was conducted using a media player device. It was decoding an MP3 of a 71 dB, 220 Hz sine wave. He ran the code twice: the first time executing the application under Nucleus, the second using Linux. The power consumed was measured and plotted over time.

On the plot, the power consumed when running Nucleus is shown in pink; Linux is dark blue. The results are interesting: when running Linux, the CPU was consuming nearly 25% more power than when it was doing the exact same job under Nucleus.

There is also an increase in power consumption of another 20% [between about 3000 and 4000 on the plot] when running Linux. What caused this? We do not know. Obviously Linux was doing something in this time frame, which affected its CPU load.
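A comparison of this kind can be reduced to a single figure by averaging each power trace and taking the relative difference. The sketch below shows the arithmetic only; the sample values are invented placeholders, not Stephen's measurements, and the names are hypothetical.

```python
from statistics import mean


def relative_increase(baseline, candidate):
    """Fractional increase of the candidate's mean power over the baseline's."""
    base = mean(baseline)
    return (mean(candidate) - base) / base


# Placeholder samples in milliwatts (made up for illustration only).
rtos_mw = [100.0, 102.0, 98.0, 100.0]
linux_mw = [124.0, 126.0, 125.0, 125.0]

increase = relative_increase(rtos_mw, linux_mw)
print(f"Mean power increase: {increase:.1%}")
```

Averaging hides short-lived bumps like the one described above, so it is worth looking at the raw trace as well as the summary figure.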

This experiment set out to see whether a conventional RTOS really did benefit a design in terms of power consumption. It succeeded in this goal. It also illustrated quite graphically the non-real-time [i.e. unpredictable] behavior of Linux, which may be a problem for certain applications.

Comments


Hi Colin,

I was reading your post and results with great interest. Next to the impact of the OS on the power consumption for a device running the same application, you highlight important challenges that software developers face today when trying to get power under control:

1) Being able to quantify power consumption for software alternatives

2) Being able to identify the cause for a power increase in the software code

With regard to 2, there are again two major aspects to consider for software implementation, analysis and optimization:

a) Understand and control the system power management (software controls what goes on/off, when and why): The analysis and optimization also have to be conducted with an awareness of usage scenarios. Depending on what the user is doing, components may be in a different state. E.g. if I am only listening to an MP3, the LCD should be dimmed as long as I am not operating the phone etc. Those power managers (such as in Android) are quite complex heuristics and (I think) extremely hard to get optimal (what is optimal?).

b) Understand and control the dynamics between the functional entities in the layers of a software stack: As far as I understand this is what your case is pointing to.

Unfortunately, I am not knowledgeable on Nucleus, but I want to provide some data on the Linux case. Therefore, I have tried to reproduce a similar scenario using a Virtual Prototype that gives me the visibility required to understand what is going on. Of course, this is just an example and not an apples-to-apples comparison. E.g. my VP mimics an ARM9 RealView Baseboard, 1 kHz timer, 200 MHz, Linux 2.6.23, mplayer, 100 Hz sine MP3 etc. Thus, I am sure it is very different from your setup. But the analysis is still relevant for understanding the dynamics of Linux. I have posted the results here:

http://groups.google.com/group/virtual-platform-users/web/mplayer100Hz_linux26_fig1.png

The analysis shows a context/process trace over time. As soon as the mplayer is started we can observe a multitude of data- and prefetch aborts. These are artifacts (page faults) triggered by the MMU as the data and process have to be mapped first. Once the process is mapped, we can only observe data-aborts (MP3 data page faults) and timer interrupts disturbing the mplayer process. However, after a certain time we can again identify a series of pre-fetch aborts which disturbs the mplayer again. In my case, the core is not able to get into idle at any time, thus the CPU is fully loaded with the decoding task. Now what can a software developer conclude from that:

– Is the periodic timer really required, should I go tickless?

– What is the cost of an OS that uses an MMU (page faults)?

– Why is the scheduler not letting the CPU go idle? Should I use a mutex instead of spin-locks?

– Etc.

I just thought it might be interesting to show how a VP can complement visibility to what you can measure with real hardware. I have

Best regards,

Achim Nohl

Excellent contribution Achim. Thanks.

Not long ago, while building a basic MP3 player, I assumed that when an MP3 player is running, the device power consumption would be constant. But to my surprise, it turned out that some of the music tracks consumed more current than others. On careful listening (not testing), the secret was out. The music that required greater current had high frequency audio content (rap, rock and hip-hop), whereas the music that had low frequency audio content (soft melodies) always consumed less current. I was surprised. So, the moral of the story is that melodies are not just good for your heart and brain, but are also good for your smartphone batteries. 🙂

@Vasanth – That makes sense. Even at the relatively low frequency of audio, the generation of a high frequency uses more power than a low one.