Designing power management software for embedded systems

Power management is an increasingly important factor in embedded software development. Historically, it was purely a hardware issue, but that has changed in recent years. Once software can control the factors that affect a system’s power consumption, it is logical that it should exercise that control efficiently.

Much is said about the implementation of power management software, but less is said about its design …

There are a few key concepts that need to be understood when discussing power management software.

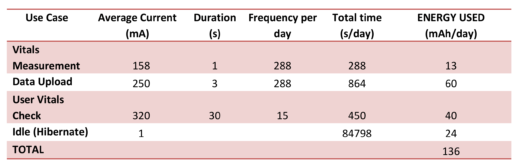

The first is the idea of a Use Case. This is an operating scenario for the device. It may be the performance of a specific operation, such as displaying some data, taking a sample from sensors, or sending data over a network. It could also be an idle state, in which the device is just waiting for something to happen. A use case may be initiated by a human user, but could equally be triggered by an external event or a timer.

Each use case puts a number of demands on the system. A specific amount of CPU power is required to do the job in hand. By using DVFS [Dynamic Voltage and Frequency Scaling], the power management software can select a voltage/frequency combination that delivers just the necessary computing power, thus minimizing power consumption. Each such voltage/frequency combination is called an Operating Point. In addition, each use case will require access to a different set of resources (peripheral devices etc.), and these can be selected [powered up] by the power management software, as required.
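To make the relationship between use cases, operating points and resources more concrete, here is a minimal sketch in C. Everything in it is an assumption made for illustration: the type names, the `select_operating_point` function, the resource flags and the voltage/frequency values are invented, and a real system would take its operating points from the silicon vendor's data.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical operating point: one voltage/frequency combination. */
typedef struct {
    uint32_t freq_mhz;
    uint32_t millivolts;
} operating_point_t;

/* Illustrative table, sorted by ascending frequency. Values are made up. */
static const operating_point_t op_table[] = {
    { 100,  900 },
    { 400, 1000 },
    { 800, 1200 },
};
#define OP_COUNT (sizeof op_table / sizeof op_table[0])

/* Hypothetical resource flags, one bit per peripheral. */
enum {
    RES_DISPLAY    = 1u << 0,
    RES_SENSOR_ADC = 1u << 1,
    RES_RADIO      = 1u << 2
};

/* A use case states what it needs; it does not say how to provide it. */
typedef struct {
    const char *name;
    uint32_t    required_mhz; /* CPU power needed for the job */
    uint32_t    resources;    /* bitmask of RES_* flags to power up */
} use_case_t;

/* Pick the slowest operating point that still meets the demand.
 * Falls back to the fastest point if the demand exceeds the table. */
const operating_point_t *select_operating_point(const use_case_t *uc)
{
    for (size_t i = 0; i < OP_COUNT; i++) {
        if (op_table[i].freq_mhz >= uc->required_mhz) {
            return &op_table[i];
        }
    }
    return &op_table[OP_COUNT - 1];
}
```

With this table, a sensor sampling use case that needs 50 MHz would get the 100 MHz point, while a display use case that needs 300 MHz would get the 400 MHz point. The `resources` mask would be handed to whatever code switches the peripherals on and off.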

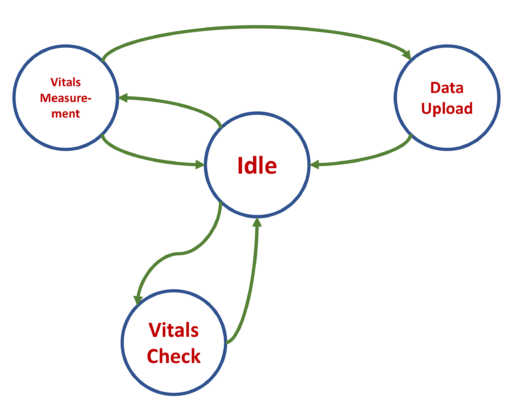

So, the implementation of an application using power management is a question of transitioning between use cases, with the PM software taking care of selecting the operating points and resource availability. It occurred to me that this kind of structure was very familiar. It sounds like a state machine. So, it must make sense to use a design approach (UML comes to mind) in which a state machine is a possible paradigm. Just a thought …
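As a rough illustration of the state machine idea, the sketch below treats each use case as a state and applies that state's power settings on entry. It is only one possible shape, not a recommended design: the states, events, transition table, settings values and the `pm_dispatch`, `pm_apply` and `pm_active` functions are all hypothetical, and `pm_apply` merely records the settings where real code would program the clocks, regulators and power switches.

```c
#include <stdint.h>

/* Hypothetical use cases (states) and events. */
typedef enum { UC_IDLE, UC_SAMPLE, UC_TRANSMIT, UC_COUNT } use_case_id_t;
typedef enum { EV_TIMER, EV_SAMPLE_DONE, EV_TX_DONE, EV_COUNT } event_t;

/* Hypothetical peripheral power flags. */
enum { PWR_SENSOR = 1u << 0, PWR_RADIO = 1u << 1 };

/* Operating point plus powered resources for one use case. */
typedef struct {
    uint32_t freq_mhz;
    uint32_t millivolts;
    uint32_t resources;
} pm_settings_t;

/* Made-up settings, one entry per use case. */
static const pm_settings_t settings[UC_COUNT] = {
    [UC_IDLE]     = {  10,  900, 0          },
    [UC_SAMPLE]   = { 100, 1000, PWR_SENSOR },
    [UC_TRANSMIT] = { 400, 1100, PWR_RADIO  },
};

/* Transition table: next_state[current][event]. */
static const use_case_id_t next_state[UC_COUNT][EV_COUNT] = {
    [UC_IDLE]     = { [EV_TIMER] = UC_SAMPLE,   [EV_SAMPLE_DONE] = UC_IDLE,
                      [EV_TX_DONE] = UC_IDLE },
    [UC_SAMPLE]   = { [EV_TIMER] = UC_SAMPLE,   [EV_SAMPLE_DONE] = UC_TRANSMIT,
                      [EV_TX_DONE] = UC_SAMPLE },
    [UC_TRANSMIT] = { [EV_TIMER] = UC_TRANSMIT, [EV_SAMPLE_DONE] = UC_TRANSMIT,
                      [EV_TX_DONE] = UC_IDLE },
};

static use_case_id_t current = UC_IDLE;
static pm_settings_t active  = { 10, 900, 0 };

/* Stand-in for the hardware layer: just records what was requested. */
static void pm_apply(const pm_settings_t *s)
{
    active = *s;
}

/* Returns the settings most recently applied. */
const pm_settings_t *pm_active(void)
{
    return &active;
}

/* Feed one event to the machine; settings change only on a transition. */
use_case_id_t pm_dispatch(event_t ev)
{
    use_case_id_t next = next_state[current][ev];
    if (next != current) {
        pm_apply(&settings[next]);
        current = next;
    }
    return current;
}
```

Starting from idle, a timer event moves the device to the sampling use case and powers the sensor; `EV_SAMPLE_DONE` then moves it to transmit, and `EV_TX_DONE` returns it to idle with everything powered down. The application code only raises events; the choice of operating point and resources stays inside the power management layer.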