Mixed-Signal Verification Makes SENSE for MEMS

InvenSense On-Demand Webinar

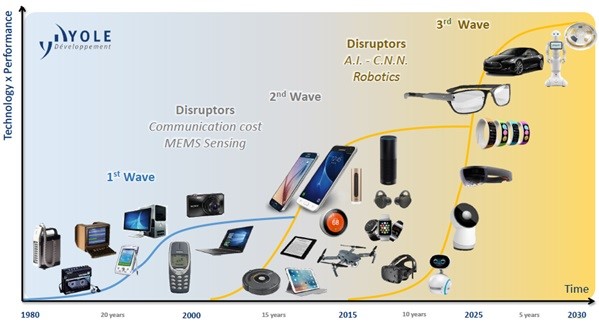

The automation age is here. Sensors play a key role in bridging the analog and digital worlds, and their deployment is enabling smarter cities, homes, and factories, as well as smarter cars and personal devices. According to Yole Développement, MEMS, sensors, and actuators accounted for approximately 10% of the $465 billion global semiconductor market in 2018, and the segment is expected to grow at an 8.2% CAGR through 2024.

Sensors and actuators have evolved over many decades, and the story begins well before the rise of electronics. In 1923, Bosch introduced a bell that warned motorists when a tire lost air pressure. Known as “The Bosch-Bell”, it was a simple but clever design: mounted on the inner part of each rim, the bell touched the ground when the tire pressure dropped and rang once per wheel rotation.

Technology has come a long way since then. The first wave of microelectromechanical systems (MEMS) entered mass production in 1995, when automakers began deploying MEMS sensors for safety and comfort features such as anti-lock braking (ABS), electronic stability control, airbag deployment, and tire-pressure monitoring (no big bell after all).

The second wave of MEMS sensors arrived in the late 2000s with a wide array of consumer electronics, including smartphones and tablets. In 2007, the original iPhone (EDGE) contained five sensors. By 2014, the number had increased to 12 in the Samsung Galaxy S5 and iPhone 5, and by 2021 it is predicted to climb to 19 or 20.

The third wave of MEMS sensors is well under way, driven by AI-based applications. In the personal health space, sensors capture large volumes of data, including location and motion awareness, such as whether a user is sitting, walking, running, or sleeping. Makers of smartphones, wearables, and health trackers, along with application developers, are all clamoring for this data because it lets them analyze real-world user behavior with machine learning. AI can then detect anomalies in user behavior and flag potential health risks.

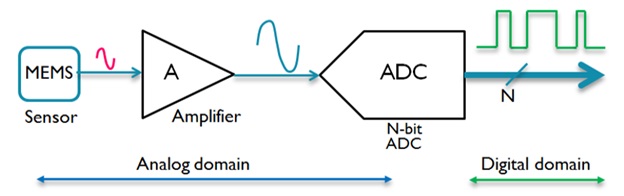

Today’s semiconductor sensor SoCs have six key requirements: higher accuracy, lower power consumption, compactness, higher reliability, higher energy efficiency, and integrated intelligence. These SoCs consist primarily of a MEMS sensor and its interfacing mixed-signal circuitry. The sensor output passes through a signal-conditioning block and is then digitized by a high-precision, digitally calibrated multi-slope ADC. The SoC must also be able to calibrate in real time to ensure an optimal user experience. To verify this complex behavior, verification teams must run a growing number of SPICE-accurate mixed-signal simulations at both the top level and the subsystem level.
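To make the "digitally calibrated multi-slope ADC" idea concrete, here is a minimal behavioral sketch in Python. It is an illustration under stated assumptions, not InvenSense's design or anything shown in the webinar: it uses a dual-slope converter (the simplest multi-slope architecture), models the analog front end's non-idealities as a hypothetical fixed offset and gain error, and applies a two-point digital calibration using two known reference inputs. The function names and error values are all hypothetical.

```python
"""Behavioral sketch of a dual-slope ADC with two-point digital calibration.

Assumptions (illustrative only): a dual-slope converter stands in for the
multi-slope ADC, inputs are unipolar (0 to vref), and the analog errors are
a hypothetical fixed offset and gain error.
"""


def dual_slope_convert(vin, vref=1.0, n_integrate=1000, offset=0.002, gain_error=1.01):
    """Return the raw count from one dual-slope conversion.

    Run-up: integrate the (non-ideal) input for a fixed n_integrate clock
    cycles. Run-down: integrate -vref and count cycles until the integrator
    returns to zero. Ideally, count ~= n_integrate * vin / vref.
    """
    v_eff = (vin + offset) * gain_error  # hypothetical front-end errors
    integrator = 0.0
    for _ in range(n_integrate):         # run-up: fixed-duration integration
        integrator += v_eff
    count = 0
    while integrator > 0.0:              # run-down: discharge with the reference
        integrator -= vref
        count += 1
    return count


def calibrate(v_low, v_high, **adc_kwargs):
    """Two-point calibration: convert two known inputs, solve for gain and offset."""
    c_low = dual_slope_convert(v_low, **adc_kwargs)
    c_high = dual_slope_convert(v_high, **adc_kwargs)
    gain = (v_high - v_low) / (c_high - c_low)  # volts per count
    offset = v_low - gain * c_low               # volts at count zero
    return gain, offset


def read_voltage(vin, gain, offset, **adc_kwargs):
    """Convert vin and apply the stored digital calibration coefficients."""
    return gain * dual_slope_convert(vin, **adc_kwargs) + offset


if __name__ == "__main__":
    gain, offset = calibrate(0.1, 0.9)
    vin = 0.5
    raw_volts = dual_slope_convert(vin) / 1000  # naive scaling: count / n_integrate
    print(f"raw: {raw_volts:.4f} V, calibrated: {read_voltage(vin, gain, offset):.4f} V")
```

With these assumed error values, the naively scaled raw reading for a 0.5 V input comes out about 8 mV high, while the calibrated reading lands back on 0.5 V. A behavioral model like this is the kind of abstraction that lets ADC and digital calibration logic be exercised together far faster than a full transistor-level run, at the cost of only capturing the non-idealities you choose to model.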

However, running full transistor-level simulations to verify ADCs together with their digital control logic can be painstakingly slow and impractical. In this on-demand webinar, we discuss how one of our customers, InvenSense (TDK), addressed these challenges using the advanced functionality in Mentor’s Symphony Mixed-Signal Verification Platform.

Before we leave you with this informative webinar, here is a fun fact: sight-based sensing currently dominates sensing technologies, followed by hearing, touch, and smell. Taste, the most complicated of all, is still a sense to be conquered in the MEMS and sensors world. It is something machines can’t savor yet, so go have a doughnut 🙂

Comments


Congratulations Sumit, this is a wonderful blog, full of knowledge and filled with fun.