Technische Universität Darmstadt

Department of Computer Integrated Design

Johannes Olbort, M.Sc.; Prof. Dr.-Ing. R. Anderl

Using Multibody Simulation in Augmented Reality

The current state of the art makes it possible to transfer Multibody Simulations (MBS) from a simulation program into augmented reality (AR). This has several advantages; for example, it allows CAD models to be simulated accurately in the real environment by means of an AR device. In this blog post I will show you how to transfer an MBS of a CAD model from Siemens NX to the AR of the Microsoft HoloLens. The MBS definition created in NX is carried over and made available on the HoloLens.

Basics: Transferring simple CAD models into AR

Transferring an MBS to AR first requires that simple CAD models can be transferred to the AR of the HoloLens. Earlier research work investigated which programs and tools are best suited for this. Without going into too much detail, we developed a process chain that brings CAD models into the AR of the HoloLens. The chain starts in a CAD program, for example Siemens NX, and passes the model through a DCC (digital content creation) program, for example 3ds Max, into a Game Engine. A Game Engine can compile an application for the HoloLens directly, and Unity has proven to be the most suitable one. With this process chain, CAD models can be deployed directly to the HoloLens as applications. Of particular interest here is the JT Open Plugin, which imports JT files into Unity and thus allows JT files to be displayed on the HoloLens.

Our current research deals with more extensive process chains in which the CAD models also contain an MBS or other additional information. As an example, we will now look at a factory planning scenario in which the movement of robots has to be simulated in the real environment using the HoloLens.

The process chain from CAD to MBS to AR

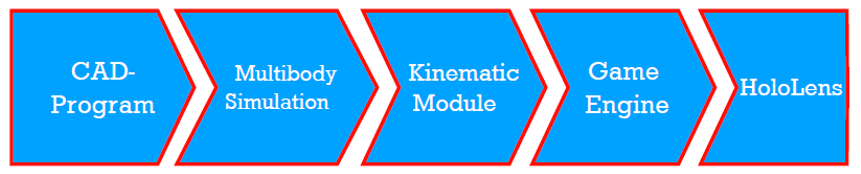

What distinguishes the import of a CAD model with a defined MBS from the import of a plain CAD model is the so-called Kinematic Module, which has to be created for this purpose. The Kinematic Module provides an interface through which the MBS information is transferred into AR. The flow of data can be represented with activity diagrams, as shown below.

We proceed similarly to the basic approach described above, but omit the DCC program and instead insert the Multibody Simulation together with the Kinematic Module.

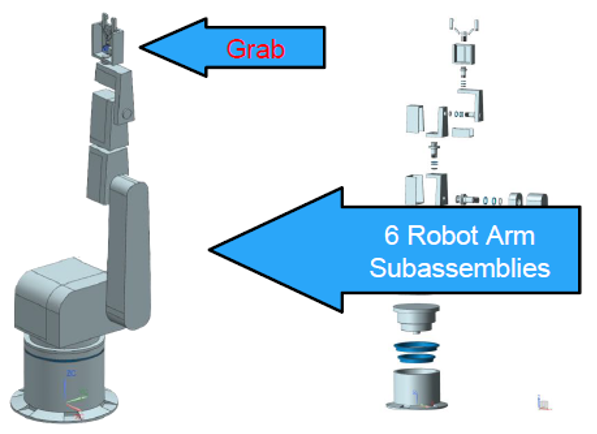

In Siemens NX, we build a robot arm consisting of six separate assemblies and a gripper. The motion bodies, joints, and drives are then defined for the Multibody Simulation, which allows the calculations required for the simulation to be carried out. The simulation results should always be checked to avoid unpleasant surprises.
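The post does not describe how the simulation results are evaluated. One simple check, sketched here in Python under the assumption that the joint angles have been exported as time series, is to flag every sample in which a joint leaves its allowed range. The data format, the joint names `J1`/`J2`, and all limit values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JointLimit:
    """Allowed joint range in degrees (hypothetical values below)."""
    lower: float
    upper: float


def find_limit_violations(samples, limits):
    """Return (time, joint, angle) for every sample outside its joint's limits.

    samples: list of (time_s, {joint_name: angle_deg}) tuples, e.g. read from
    an exported results table (hypothetical format).
    """
    violations = []
    for t, angles in samples:
        for joint, angle in sorted(angles.items()):
            lim = limits[joint]
            if not lim.lower <= angle <= lim.upper:
                violations.append((t, joint, angle))
    return violations


# Hypothetical example data for two of the arm's joints.
LIMITS = {"J1": JointLimit(-170.0, 170.0), "J2": JointLimit(-90.0, 135.0)}
SAMPLES = [
    (0.0, {"J1": 0.0, "J2": 0.0}),
    (0.1, {"J1": 45.0, "J2": 90.0}),
    (0.2, {"J1": 90.0, "J2": 140.0}),
]

if __name__ == "__main__":
    for t, joint, angle in find_limit_violations(SAMPLES, LIMITS):
        print(f"t={t:.1f} s: {joint} at {angle:.1f} deg is outside its limits")
```

With the sample data, only `J2` at 0.2 s is reported, since 140° exceeds its assumed upper limit of 135°.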

Next, we turn to the Kinematic Module. It essentially consists of three parts: the Reader, the Converter, and the Factory. The Reader reads XML files and outputs a list of joints. The Converter then converts the vectors of the Game Objects into the required coordinate system, after which the Factory creates the joints and sets their properties. Only the data of the Multibody Simulation has to be read in and adjusted; the Kinematic Module then creates all necessary kinematic elements automatically.
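The Reader → Converter → Factory pipeline can be sketched in Python as follows. This is not the authors' implementation (which would live in Unity); it is an illustration under stated assumptions: the XML layout, the `JointDef`/`HingeSpec` types, and all function names are hypothetical, and NX is assumed to export a right-handed, Z-up frame in millimetres. Unity uses a left-handed, Y-up frame in which one unit conventionally corresponds to one metre.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

Vec3 = tuple[float, float, float]

# Hypothetical XML layout; the real export format is not documented in the post.
SAMPLE_XML = """<mbs units="mm">
  <joint name="J1" type="revolute" parent="Base" child="Link1">
    <origin x="0" y="0" z="400"/><axis x="0" y="0" z="1"/>
    <limits lower="-170" upper="170"/>
  </joint>
  <joint name="J2" type="revolute" parent="Link1" child="Link2">
    <origin x="150" y="0" z="650"/><axis x="0" y="1" z="0"/>
    <limits lower="-90" upper="135"/>
  </joint>
</mbs>"""


@dataclass
class JointDef:  # Reader output, still in the (assumed) NX frame
    name: str
    kind: str
    parent: str
    child: str
    origin: Vec3
    axis: Vec3
    limits_deg: tuple[float, float]


@dataclass
class HingeSpec:  # Factory output, in Unity's frame
    name: str
    body: str            # body that carries the joint (child)
    connected_body: str  # body it is attached to (parent)
    anchor_world_m: Vec3
    axis: Vec3
    limits_deg: tuple[float, float]


def _vec(elem: ET.Element) -> Vec3:
    return (float(elem.get("x")), float(elem.get("y")), float(elem.get("z")))


def read_joints(xml_text: str) -> tuple[str, list[JointDef]]:
    """Reader: parse the XML into its unit string and a list of joints."""
    root = ET.fromstring(xml_text)
    joints = []
    for j in root.iter("joint"):
        lim = j.find("limits")
        joints.append(JointDef(j.get("name"), j.get("type"), j.get("parent"),
                               j.get("child"), _vec(j.find("origin")),
                               _vec(j.find("axis")),
                               (float(lim.get("lower")), float(lim.get("upper")))))
    return root.get("units", "mm"), joints


def to_unity_point(p: Vec3, per_metre: float) -> Vec3:
    """Converter for positions: swapping Y and Z turns right-handed Z-up into
    left-handed Y-up; dividing by per_metre converts to metres."""
    x, y, z = p
    return (x / per_metre, z / per_metre, y / per_metre)


def to_unity_axis(a: Vec3) -> Vec3:
    """Converter for hinge axes: a rotation axis is an axial vector, so after
    the handedness-flipping swap it is also negated, which lets joint angles
    and limits keep their sign. (0.0 - v avoids printing -0.0.)"""
    x, y, z = a
    return (0.0 - x, 0.0 - z, 0.0 - y)


UNITS_PER_METRE = {"mm": 1000.0, "m": 1.0}


def build_hinges(units: str, joints: list[JointDef]) -> list[HingeSpec]:
    """Factory: create one hinge description per revolute joint."""
    per_metre = UNITS_PER_METRE[units]
    hinges = []
    for j in joints:
        if j.kind != "revolute":
            raise ValueError(f"{j.name}: joint type {j.kind!r} not handled here")
        hinges.append(HingeSpec(j.name, j.child, j.parent,
                                to_unity_point(j.origin, per_metre),
                                to_unity_axis(j.axis), j.limits_deg))
    return hinges


if __name__ == "__main__":
    units, joints = read_joints(SAMPLE_XML)
    for hinge in build_hinges(units, joints):
        print(hinge)
```

Keeping the Reader free of any coordinate logic means a different export format only affects `read_joints`, while the frame conversion stays in one place.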

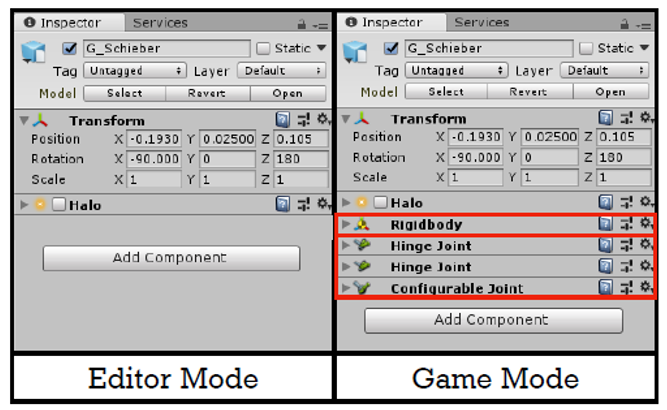

The generated data can now be exported and inserted into Unity. During a test run, the created joints can be seen in Unity's Play Mode. The robot arm can be controlled, and new positions of the multibody system can be set easily.
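Setting a new position for a joint typically means clamping the requested angle to the joint limits and then approaching it gradually rather than jumping. The Python sketch below shows that logic; the function names and all numbers are hypothetical. In Unity, such a target could for example be written to the `targetPosition` of a `HingeJoint`'s `JointSpring`, but the post does not say how the joints are driven, so this is only one possible mapping.

```python
def clamp(value: float, lower: float, upper: float) -> float:
    """Limit value to the closed interval [lower, upper]."""
    return max(lower, min(upper, value))


def step_toward(current: float, target: float, max_speed: float, dt: float,
                lower: float, upper: float) -> float:
    """Advance a joint angle (deg) by one frame toward a new target position.

    The target is first clamped to the joint limits; the step is then capped
    at max_speed * dt (deg/s times s) so the arm moves smoothly.
    """
    goal = clamp(target, lower, upper)
    max_step = max_speed * dt
    return current + clamp(goal - current, -max_step, max_step)


def trajectory(start: float, target: float, max_speed: float, dt: float,
               lower: float, upper: float, frames: int) -> list[float]:
    """Joint angle after each of `frames` steps."""
    angles, angle = [], start
    for _ in range(frames):
        angle = step_toward(angle, target, max_speed, dt, lower, upper)
        angles.append(angle)
    return angles


if __name__ == "__main__":
    # Hypothetical: target beyond the +170 deg limit, 90 deg/s, 0.5 s frames.
    print(trajectory(0.0, 200.0, 90.0, 0.5, -170.0, 170.0, frames=5))
    # → [45.0, 90.0, 135.0, 170.0, 170.0]
```

The requested 200° is never reached: the joint stops at its assumed limit of 170°.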

However, this process is very time-consuming because of the high number of degrees of freedom and the limited functionality of the Game Engine environment. In addition, when the degrees of freedom are heavily constrained, the multibody system often behaves unrealistically because the joints separate from one another.
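To make the number of degrees of freedom concrete, the Grübler–Kutzbach criterion for spatial mechanisms gives the mobility $M$ from the number of bodies $n$ (including the fixed base) and $j$ joints with $f_i$ degrees of freedom each. Assuming, for illustration, that the six assemblies are moving links connected in series to a fixed base by six revolute joints ($f_i = 1$), and leaving the gripper aside:

$$
M = 6\,(n - 1 - j) + \sum_{i=1}^{j} f_i = 6\,(7 - 1 - 6) + 6 \cdot 1 = 6
$$

Counted the other way round, the six moving links have $6\,(n-1) = 36$ rigid-body coordinates, and each revolute joint removes $6 - f_i = 5$ of them, so $6 \cdot 5 = 30$ constraints must hold for only 6 degrees of freedom to remain. If a joint-based physics setup does not satisfy these constraints exactly, the links can drift apart, which is one plausible reading of the joint separation described above.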

The MBS can now be used for further projects in Unity. Since our goal is to bring the MBS to the HoloLens, we apply a few HoloLens-specific settings and transfer the MBS to the HoloLens via the Universal Windows Platform (UWP). The final results can be seen in the following video.