Apollo 13: The first digital twin

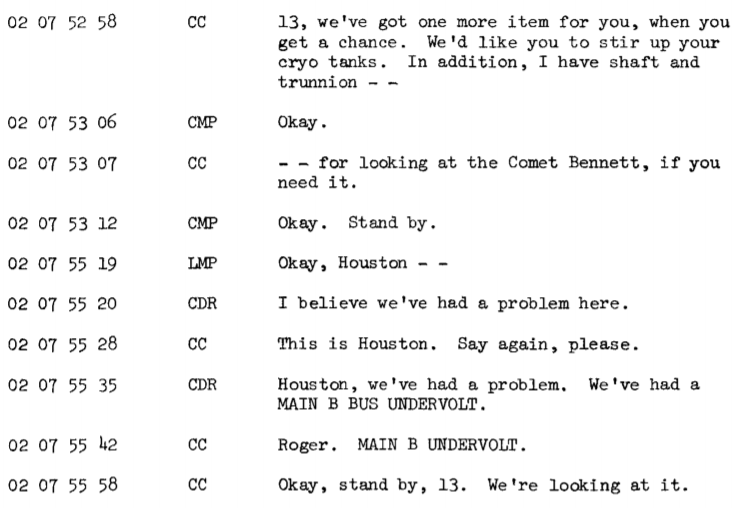

50 years ago today, 210,000 miles away from earth (about 340,000 km), three astronauts were suddenly disturbed by a “bang-whump-shudder” that shook their tiny spacecraft. One of the astronauts saw the hull physically flex. Within seconds, the cabin was illuminated with warning lights, and a cacophony of alarms began to ring in the astronauts’ ears.

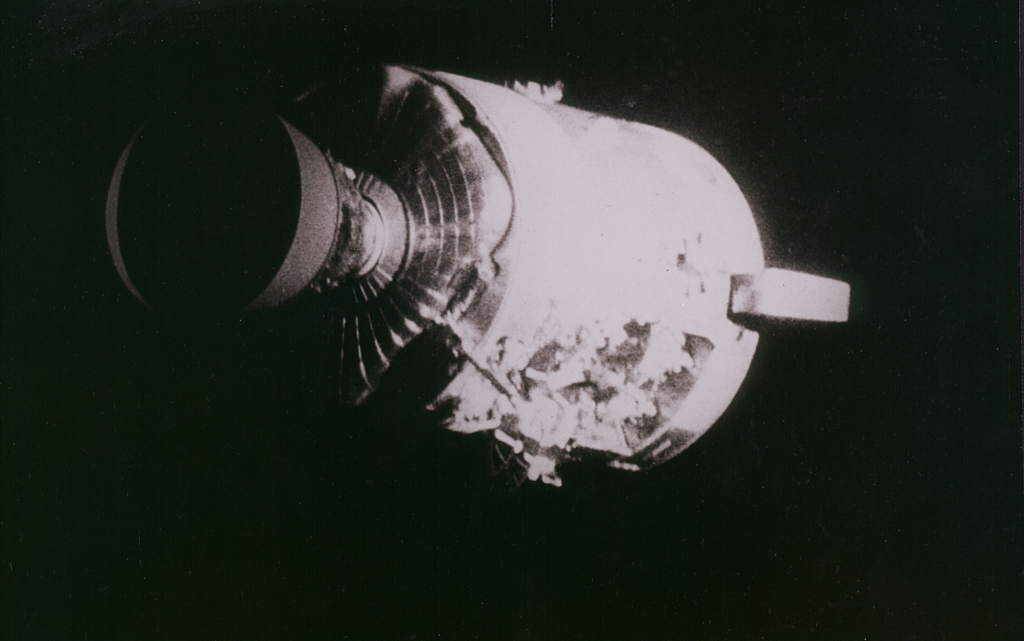

Something was wrong, seriously wrong, but unable to see outside the craft, the astronauts had no way of gauging the full extent of the terrible damage.

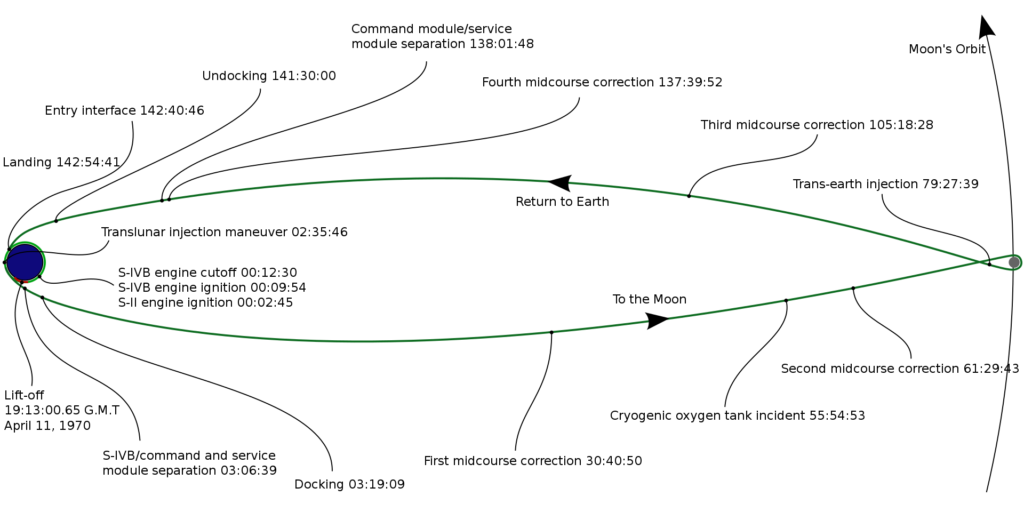

What they didn’t yet know, and wouldn’t finally know until they jettisoned the service module in the final hours before reentry, was that an explosion in one of the oxygen tanks had crippled the service module, possibly damaging their main engine, and left their vital oxygen supplies leaking into space.

With every hour that passed, the crippled spacecraft moved thousands of miles farther away from earth. In the history of our species, no one had been in this much trouble so far away from home. Indeed, a few hours later they would be farther from home than anyone has ever been, either before or in the 50 years since.

Although spacecraft bound for the moon typically travel on a “free return trajectory”, which swings them around the moon and back to earth without further engine burns, a maneuver burn executed by Apollo 13 about a day earlier (intended to improve lighting conditions for their landing) had taken them off this path. On their current trajectory, and without further engine burns, they would never come closer than 45,000 miles to their home planet, stuck in an elliptical orbit around it for eternity.

Digital twin to the rescue

How do you diagnose, and solve, the problem of a failing physical asset that is more than 200,000 miles away and beyond direct human intervention (other than that of the three astronauts trapped inside, who could not even see the damage caused by the explosion)?

In the first moments after the explosion, Mission Control struggled just to keep the astronauts alive, conscious that any wrong decision might cause further terminal damage to the fragile spacecraft. Mission Control and the astronauts worked around the clock.

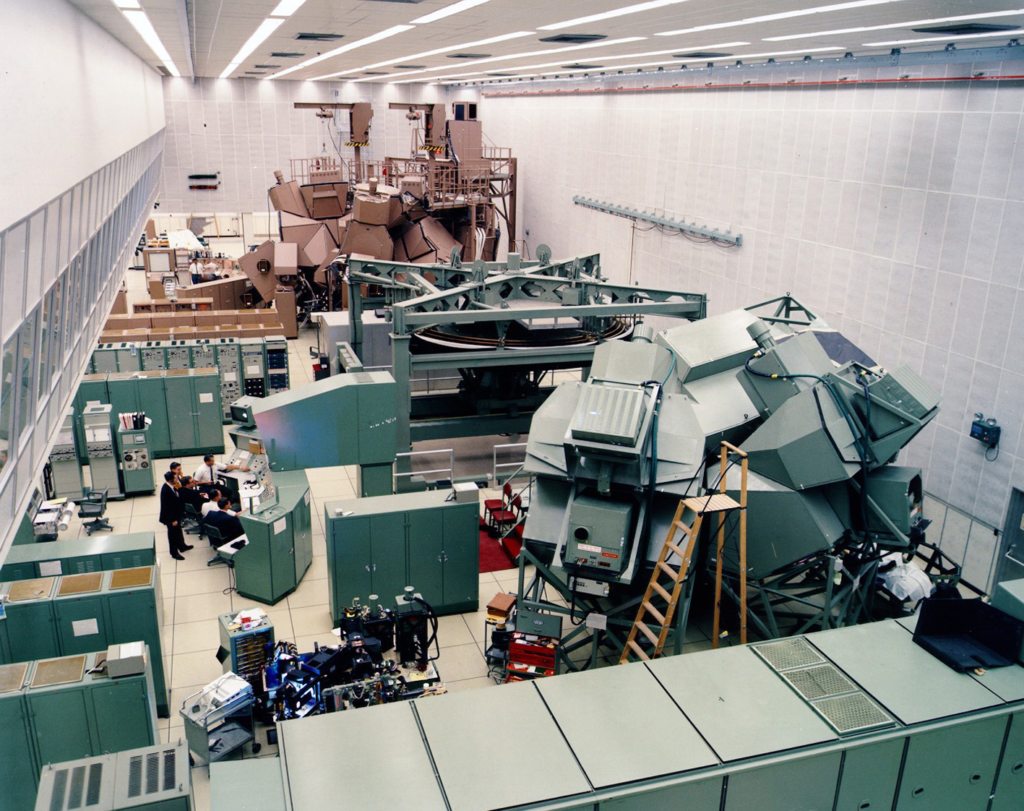

Behind the scenes, NASA had 15 simulators that were used to train astronauts and mission controllers in every aspect of the mission, including multiple failure scenarios (some of which proved invaluable in averting disaster on both Apollo 11 and Apollo 13).

“The simulators were some of the most complex technology of the entire space program: the only real things in the simulation training were the crew, cockpit, and the mission control consoles, everything else was make-believe created by a bunch of computers, lots of formulas, and skilled technicians”.

Gene Kranz, NASA Chief Flight Director for Apollo 13

To bring the astronauts home, mission control and the astronauts would have to work together to figure out how to maneuver and navigate a badly damaged spacecraft operating in an unusual configuration well outside of its design envelope. They would have to find innovative ways of conserving power, oxygen, and water while keeping astronauts and spacecraft systems alive. And finally, they would have to work out how to restart a command module that was never designed to be switched off in space.

Although they obviously weren’t called that at the time, my contention is that these simulators were perhaps the first real example of “digital twins”. I’ll explain in this blog how these high-fidelity simulators and their associated computer systems were crucial to the success of the Apollo program, and how 50 years ago their flexibility and adaptability helped to bring three American astronauts safely home from the very brink of disaster in deep space.

Of course, by itself, a simulator is not a digital twin. What sets the Apollo 13 mission apart as probably the first use of a digital twin is the way that NASA mission controllers were able to rapidly adapt and modify the simulations to match conditions on the real-life crippled spacecraft, so that they could research, reject, and perfect the strategies required to bring the astronauts home.

But before we explore the critical role these prototype “digital twins” played in the rescue of Apollo 13, it’s worth examining how these simulators helped prevent disaster for both Apollo 11 and 13 even before the launch of either mission.

Use before launch

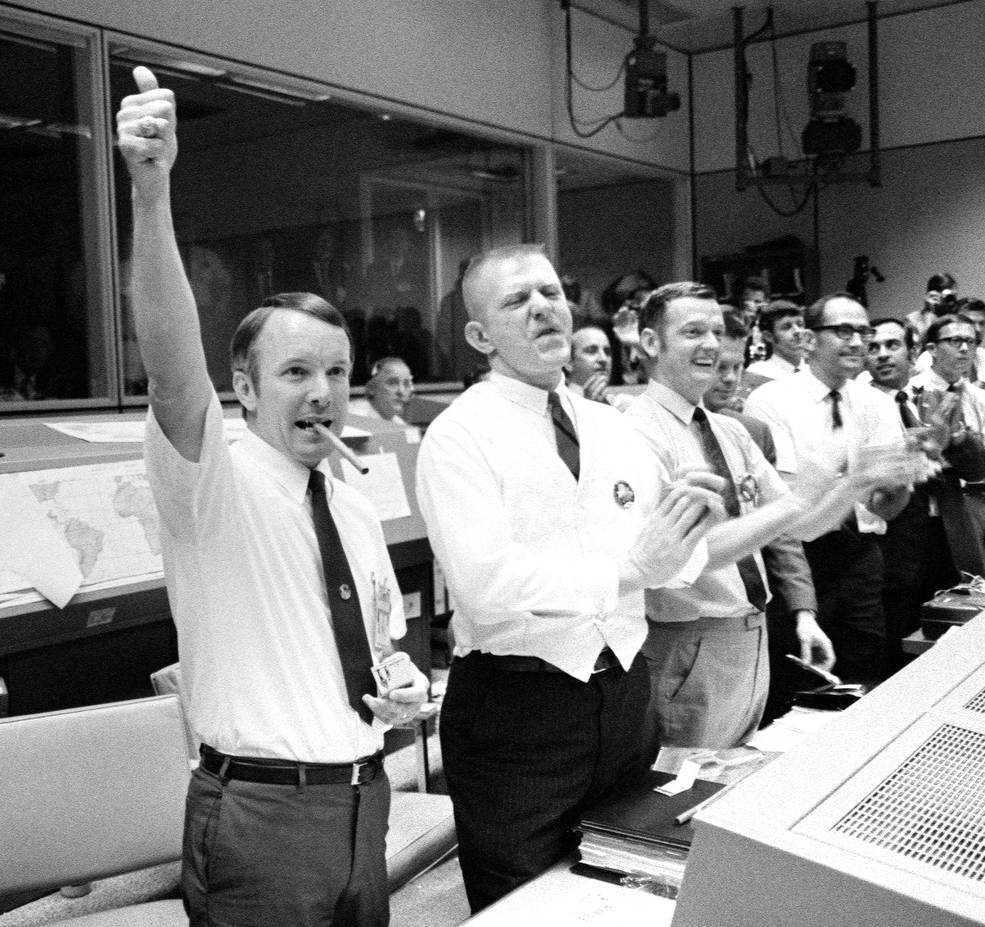

If you listen to the audio recordings of the flight controller loops immediately after the explosion, what strikes you most is the sense of controlled calm that is maintained throughout the incident. No one panics, no one loses their temper; all you can hear is calm, reasoned decision-making in the face of a terrible and potentially tragic situation.

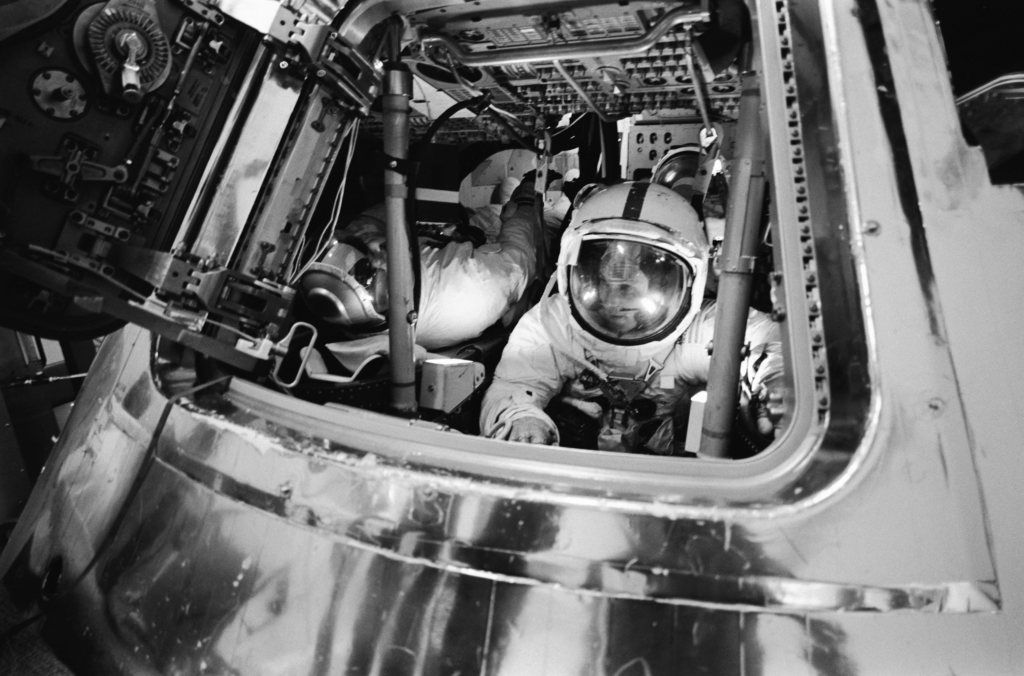

This is because the flight controllers and the Apollo 13 crew were well rehearsed through simulation. Before launch, simulators were used to define, test, and refine “mission rules”, the instructions that determined the actions of mission controllers and astronauts in critical mission situations. Of the many simulators, the command module and lunar module simulators accounted for 80 percent of the 29,967 hours of Apollo training time.

Flight director Gene Kranz’s White Mission Control Team (one of three) had 11 days of simulation training to prepare for the landing of Apollo 11, seven of them with the actual crew and four with simulated astronauts. As well as training both teams, the purpose of the sessions was to define a set of “mission rules” that would govern any actions taken by the crew and mission control (these included “Go/No-go” decisions at critical stages of the spaceflight, and how decisions would be made in a crisis).

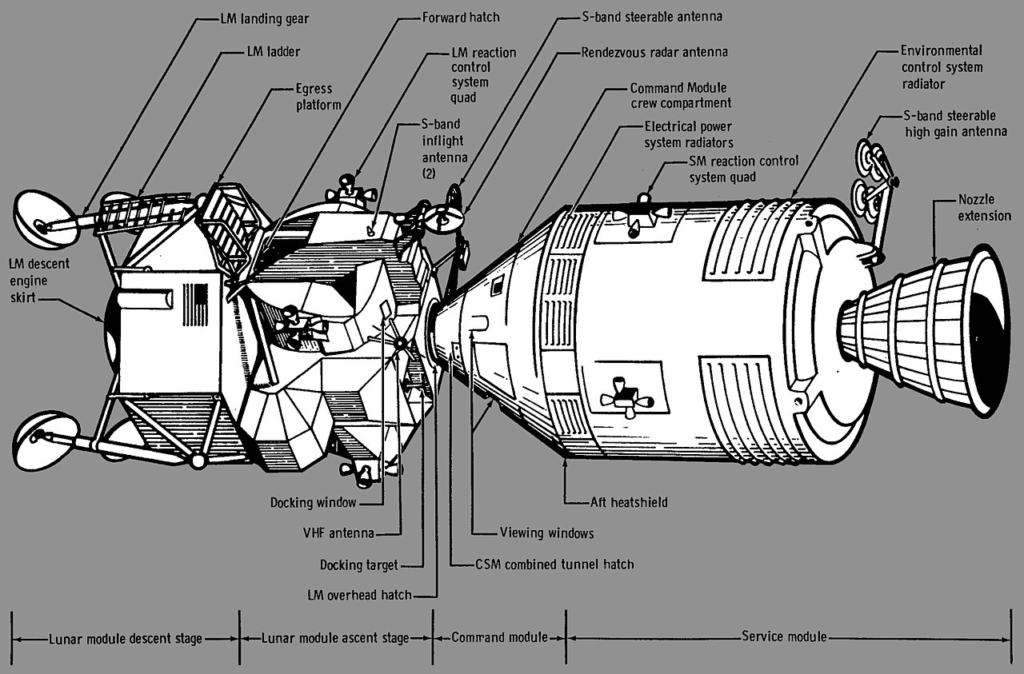

The various simulators were controlled by a network of digital computers, up to ten of which could be linked together to simulate a single large problem. Four computers served the command module simulator and three the lunar module simulator. The computers could communicate through 256K words of common memory, where information needed throughout the simulation could be stored.

There are at least two examples of simulated scenarios that directly influenced the successful resolution of problems on the actual missions (although there are likely hundreds more).

In the final simulation before the Apollo 11 mission, controllers wrongly aborted during the final stages of the lunar landing when the guidance computer issued a “1201” alarm code that they had never seen before. After quickly determining that the code indicated a computer overload, meaning the computer might not be keeping up with its tasks, mission control called for an abort. This was the wrong decision. After conferring with the Massachusetts Institute of Technology (MIT) team that programmed the computer, guidance officer (GUIDO) Steve Bales concluded that a 1201 code was a warning rather than a critical error, and rewrote the mission rules just nine days before the eventual landing. If the final simulation hadn’t prompted him to do that, the actual Apollo 11 landing, the final minutes of which were plagued by a series of 1201 and similar 1202 alarms, would likely also have been aborted. The Eagle would not have landed.

During preparations for Apollo 10, mission controllers were tested in a simulation that involved the failure of the spacecraft’s fuel cells as it approached lunar orbit, a scenario strikingly similar to the Apollo 13 explosion. Controllers tried to evacuate the astronauts into the lunar module, using it as a “life raft”, but did not manage to power it up in time, killing the virtual crew. Although many NASA insiders dismissed the multiple failures in that scenario as “unrealistic”, it inspired the controllers involved to develop procedures that would allow the lunar module to be used as a lifeboat, even with a crippled command module.

Although the simulators did not play a key role in the design of the spacecraft, they did play an essential role in defining its operating parameters. This illustrates one of the key purposes of the digital twin: to test the asset, and its systems and procedures, over a wide range of possible operating conditions.

Connected twins

Most modern digital twins involve a remote physical asset that is connected to the digital model through a continuous stream of data. This connection is used to update the computer models in response to changes in the real-life object. Although Apollo 13 obviously didn’t use “the Internet of Things”, NASA did use state-of-the-art telecommunications technology to stay in touch with its spacecraft. That data was ultimately used to modify the simulators in order to reflect the condition of the crippled spacecraft.
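To make the idea of a connected twin more concrete, here is a minimal Python sketch, assuming telemetry arrives as frames of named channel readings. The `ConnectedTwin` class, the channel names, and the pressure values are hypothetical illustrations, not a model of NASA’s actual systems: each frame updates the twin’s state, readings that stray too far from the model’s expectation are flagged, and `adapt` lets engineers change what the model expects once the physical asset itself has changed.

```python
class ConnectedTwin:
    """Hypothetical connected twin: telemetry frames update the model's state,
    and readings that deviate from the model's expectations are flagged."""

    def __init__(self, expected: dict[str, float], tolerance: float) -> None:
        self.expected = dict(expected)     # value the model predicts for each channel
        self.tolerance = tolerance         # allowed relative deviation before flagging
        self.state: dict[str, float] = {}  # latest reading seen on each channel

    def ingest(self, frame: dict[str, float]) -> list[str]:
        """Apply one telemetry frame; return the channels that look anomalous."""
        anomalies = []
        for channel, value in frame.items():
            self.state[channel] = value
            predicted = self.expected.get(channel)
            if predicted is not None and abs(value - predicted) > self.tolerance * abs(predicted):
                anomalies.append(channel)
        return anomalies

    def adapt(self, channel: str, new_expected: float) -> None:
        """Reconfigure the model when the physical asset itself has changed."""
        self.expected[channel] = new_expected


# Illustrative values only: one tank reading collapses, the other stays near nominal.
twin = ConnectedTwin({"o2_tank_1_psi": 900.0, "o2_tank_2_psi": 900.0}, tolerance=0.1)
print(twin.ingest({"o2_tank_1_psi": 880.0, "o2_tank_2_psi": 0.0}))  # ['o2_tank_2_psi']
# Once engineers accept that tank 2 is gone, the twin stops flagging it.
twin.adapt("o2_tank_2_psi", 0.0)
print(twin.ingest({"o2_tank_2_psi": 0.0}))  # []
```

The `adapt` step reflects the point that a twin is only useful if it can be reconfigured as quickly as the real asset changes; a real system would also need timestamps, data validation, and physics-based predictions rather than fixed expected values.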

In the audio transcripts of the Apollo 13 flight controller loops, most of the immediate discussion is about maintaining data connections with the spacecraft (for example, executing roll maneuvers to better align the main antenna with ground tracking stations). One of the unsung heroes of the incident was the Instrumentation and Communications Officer (INCO), Gary Scott, who calmly kept the data stream running while almost everything else was falling apart.

For most people, the Apollo 13 story begins with commander Jim Lovell’s ominous “Houston, we have a problem” announcement (actually “Houston, we’ve had a problem here”, first uttered by Jack Swigert and then repeated by Lovell). But mission control knew from telemetry that something had gone wrong even before that voice report (which was itself delayed by more than a second by the transmission distance). The guidance officer calmly announces, “We’ve had a hardware restart. I don’t know what it was”, and seconds later the Electrical, Environmental, and Consumables Manager (EECOM) announces, “We’ve got some instrumentation funnies, let me add them up… we may have had an instrumentation problem”.

In the first 15 minutes after the explosion, flight director Gene Kranz became wrongly convinced that the problems they were observing were a symptom of communication problems caused by an antenna glitch they had been trying to resolve earlier, a frequent problem on previous Apollo missions. In his autobiography, Kranz berates himself for this, and for wasting critical time pursuing the wrong scenario, although to any outsider he seems to be following a calm and rational decision-making process during a rapidly unfolding crisis.

Kranz says, “In mission control, you can’t see, smell, or touch a crisis except through telemetry and the crew’s voice reports.” The crew trapped inside the command module could not see the damage either, and apart from the loud thud, which was not instantly recognized as an explosion, they had to rely on the same telemetry that was being transmitted back to mission control. In the early stages of the crisis, Kranz and the crew switched different systems in and out to try to work out what was working and what was broken.

Although Apollo-era data communications were crude by modern standards, they highlight a common problem with modern digital twins: acquiring real-time data is one thing, but processing that data into a form that can easily be used to make real-time decisions remains a challenge.

Even with these limitations, mission control was able to diagnose the problem quickly and accurately, and to evacuate the astronauts into the lunar module before their oxygen supplies failed. They also made the sensible decision not to rely on the service module engine, which might have been damaged beyond use. And they were able to use the telemetry to modify their simulators to reflect the condition of the physical asset, another key quality of a digital twin.

Digital twin to the rescue

NASA faced many problems that all had to be solved to bring the crew safely home, several of which could only be solved through extensive use of the simulator digital twins.

One significant recurring problem was maneuvering the spacecraft, which was never designed to be flown from the lunar module with the crippled service and command modules attached for the whole return journey. With the guidance computer powered down to save energy, the crew had to align the spacecraft precisely by hand for three separate engine burns, which were needed to put the spacecraft back on, and keep it on, a free return trajectory to earth. In normal operation, these maneuvers were performed by the onboard computer, but in this unusual configuration much of the work had to be done manually. With limited fuel on board, any mistake could have been terminal, leaving the vessel on the wrong trajectory.

The early signs were not promising, as the crew struggled to control the spacecraft. “Why the hell are we maneuvering like this? Are we still venting?” exclaimed a frustrated Jim Lovell at one stage.

Mission control immediately dispatched the backup crew to practice the maneuvers on simulators that were being modified to reflect the unusual spacecraft configuration, which involved reprogramming the mainframes with the new spacecraft mass, center of gravity, and engine thrust. Working with the lunar module manufacturer, Grumman (now part of Northrop Grumman), the simulation team quickly worked out a new procedure in which the ship could be stabilized using the autopilot, with the landing gear deployed to get it out of the way of the descent engine. It worked, and the crew successfully performed the free return burn, greatly increasing their confidence.
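At its simplest, reprogramming a simulator for the docked configuration means recomputing the mass properties of the stack. Here is a minimal sketch, assuming a one-dimensional stack and invented round-number masses and thrust (not the real Apollo figures): the combined center of gravity is the mass-weighted average of each module’s center of gravity, and the acceleration produced by the same engine falls as the stack gets heavier.

```python
from typing import NamedTuple


class Module(NamedTuple):
    name: str
    mass_kg: float
    cg_m: float  # center of gravity along the stack's long axis, from an arbitrary datum


def stack_properties(modules: list[Module], thrust_n: float) -> tuple[float, float, float]:
    """Return (total mass in kg, combined center of gravity in m, acceleration in m/s^2)."""
    total_mass = sum(m.mass_kg for m in modules)
    # Combined center of gravity: mass-weighted average of each module's position.
    cg = sum(m.mass_kg * m.cg_m for m in modules) / total_mass
    # Newton's second law: the same thrust accelerates a heavier stack more slowly.
    accel = thrust_n / total_mass
    return total_mass, cg, accel


# Hypothetical round numbers, for illustration only.
lander = Module("lander", 15_000.0, 0.0)
command_and_service = Module("command_and_service", 30_000.0, 10.0)
print(stack_properties([lander], thrust_n=40_000.0))
print(stack_properties([lander, command_and_service], thrust_n=40_000.0))
```

A real simulator would also need moments of inertia and off-axis offsets, which this one-dimensional sketch ignores.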

A similar problem arose with the burn scheduled two hours after Apollo 13’s closest approach to the moon (the so-called pericynthion, or PC for short), which was intended to speed up the return voyage by 12 hours and bring the crew home just within the lifetime of their battery supplies. This time the problem was that the lunar module’s guidance systems had been turned off to conserve power, forcing the astronauts to align the spacecraft manually using only visual cues. Ordinarily, the crew could have navigated using the stars as a backup, but the large amount of debris surrounding the crippled spacecraft made it impossible for the astronauts to identify any constellations. In desperation, NASA once again turned to the simulators. After hours of trial and error, the simulator team came up with an ad hoc procedure to align the tiny window of the lunar module with a quadrant of the sun. Before making the complicated alignment maneuver, commander Jim Lovell sought repeated assurance from mission control: “Had the backup crew configured the simulator properly with the lunar module in docked configuration? Had they had any trouble performing the maneuver?” He needn’t have worried. Using the rapidly assembled procedure, Lovell managed to align the spacecraft within the one-degree margin of error required to keep it on course.

The final problem was that, in order to use the lunar module as a life raft, the astronauts had to power down the command module, which they would ultimately return to for reentry. However, the command module had only ever been designed to be powered up on the launch pad, a complex process that took over two days, and no procedures existed for restarting it in deep space with almost exhausted power supplies. Meanwhile, in normal operation the lunar module drew about 70 amperes, which would quickly have exhausted its limited batteries. To conserve power, mission control shut down non-essential systems (including the crew heaters), reducing the load to less than 12 amps (less than a domestic vacuum cleaner). Even so, as the time approached to power up the command module, less than two hours of power remained.

Behind the scenes, EECOM John Aaron worked around the clock to define a minimal power-up sequence, which everyone hoped would wake the command module before reentry using the trickle of power left in its batteries. The exhausted and frozen crew were understandably anxious about working their way correctly through a long and complicated procedure that involved operating hundreds of switches in the right order. Any mistake or omission could prove fatal, as turning on the wrong system would rapidly deplete the small amount of remaining power. Astronaut Ken Mattingly (who had been bumped from the Apollo 13 crew a few days before launch) sealed himself in a dark command module simulator to rehearse and refine the switch-on sequence before it was radioed to the crew.
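The value of rehearsing a switch-on sequence in a simulator is that ordering mistakes and power overruns show up on the ground rather than in space. Here is a minimal sketch of that idea, with invented system names, loads, durations, and battery budget (this is not John Aaron’s actual procedure): each step switches a system on, checks that its prerequisites are already powered, and deducts the amp-hours drawn by the running total load.

```python
from typing import NamedTuple


class Step(NamedTuple):
    system: str                     # hypothetical system being switched on
    load_amps: float                # extra current drawn once it is on
    minutes: float                  # time spent on this step before the next one
    requires: tuple[str, ...] = ()  # systems that must already be on


def rehearse(sequence: list[Step], budget_amp_hours: float) -> float:
    """Walk a power-up sequence in order and return the amp-hours left over.

    Raises ValueError if a system is switched on before its prerequisites
    or if the cumulative draw exceeds the battery budget."""
    powered: set[str] = set()
    load = 0.0
    remaining = budget_amp_hours
    for step in sequence:
        missing = [s for s in step.requires if s not in powered]
        if missing:
            raise ValueError(f"{step.system} switched on before {', '.join(missing)}")
        powered.add(step.system)
        load += step.load_amps
        remaining -= load * step.minutes / 60.0  # everything already on keeps drawing current
        if remaining < 0:
            raise ValueError(f"battery budget exhausted at {step.system}")
    return remaining


# Invented example sequence, for illustration only.
sequence = [
    Step("main_bus", 5.0, 10),
    Step("instrumentation", 3.0, 10, ("main_bus",)),
    Step("guidance_computer", 10.0, 30, ("main_bus",)),
]
print(round(rehearse(sequence, budget_amp_hours=20.0), 2))  # 8.83
```

Because the load accumulates, the order of the steps matters as much as which systems are switched on: powering a heavy system early drains the budget for every step that follows.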

Is that really a digital twin?

I don’t think it’s much of a spoiler to tell you that, thanks to the round-the-clock work of hundreds of NASA engineers and controllers, the three astronauts returned home safely. What might have been NASA’s greatest disaster turned out to be its greatest triumph. Although the Apollo 13 mission took place 32 years before the term “digital twin” was coined, I think it remains one of the best real-life examples of a digital twin in action. I don’t think the astronauts would have made it safely home without it.

Here are the characteristics of the Apollo simulators that I think define them as perfect examples of a digital twin in action:

- Physical: Digital twins are most useful when they relate to physical assets that are (at least temporarily) out of reach of direct human intervention. Even though there were three astronauts on board, Apollo 13 is a perfect example of this.

- Connected: Digital twins require a constant feed of data from the physical asset, which is used both to update the model’s condition and to inform engineering decisions. Modern digital twins typically use “the Internet of Things” to achieve this; NASA achieved the same purpose with advanced telecommunications, including two-way data transfer.

- Adaptable: Digital twins need to be flexible enough to react to changes in the physical asset. NASA was able to reconfigure their simulators in a matter of hours to reflect a configuration that had never been envisaged during their design and use those simulations to provide critical information to the crew.

- Threaded: There wasn’t a single “digital twin” for the Apollo program; NASA used 15 different simulators to master the various aspects of the mission. The notion that modern digital twins must be built around a single “grand unified model” that predicts every aspect of the physical device is likewise a misconception. Contemporary digital twins consist of multiple interacting models that can be combined to account for different aspects of performance.

- Responsive: The events of Apollo 13 played out over just three and a half days, during which an incredible amount of adaptation and re-engineering took place. I doubt whether many contemporary digital twins could have been deployed so quickly after critical damage to their physical assets.

Finally, if you are wondering what Siemens had to do with all of this: we made the electroluminescent lamps that lit the instrument panels of Apollo 13 with a magical green light. Apparently, the lamps consumed almost no electricity, which is useful when you are stuck on a broken spacecraft 210,000 miles from home.

Credits: NASA
