AI Foundations: The path to convolutional neural networks

Understanding the building blocks of artificial intelligence.

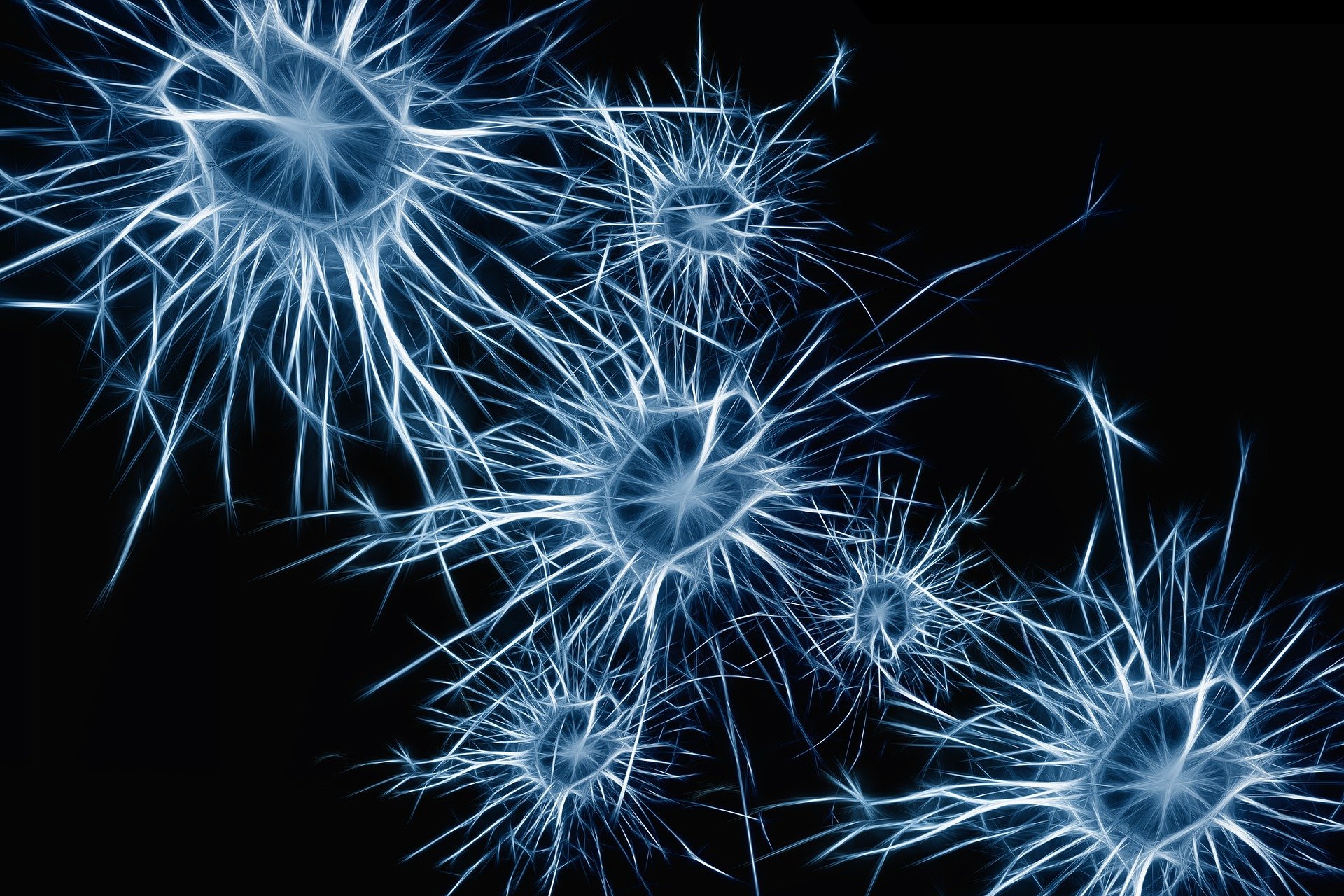

For many decades, thousands of scientists, doctors, and engineers have studied how the human brain works with the goal of artificially replicating intelligence. They categorize artificial intelligence (AI) as either weak or strong. Weak AI is focused on a single task, while strong AI is a general intelligence that can actually understand, plan, and make decisions about anything. Today, we are just getting good at weak AI, with dreams of strong AI for tomorrow. But how did we get where we are today? While many teams of people toiled away and provided breakthroughs, it is the path to the convolutional neural network (CNN) that is most interesting.

In 1943, Warren McCulloch and Walter Pitts published a paper theorizing that brain functions based around neurons and synapses could be modeled using math and logic operators (AND, OR, and NOT) to construct a network that could respond to input information in order to learn. If robust software languages and powerful computers had been available at the time, this would have been the event that lit the fuse on AI. Alas, it was too early.

In 1957, a computer program called the Perceptron was created to model a single-layer neural network that compared images in order to learn a targeted item (like a cat). It had two inputs and one output to mathematically determine whether a match was true or false. It accomplished this by updating its linear boundary based on the input images.
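The boundary update described above can be sketched in a few lines of Python. This is a minimal illustration, not the original 1957 program: the function names, the learning rate, and the use of the logical AND function as training data (in place of images) are all assumptions chosen to keep the example small.

```python
# Toy training set (an assumption for illustration): the logical AND function.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, x):
    """Return 1 if the point x lies on the positive side of the line, else 0."""
    weighted_sum = weights[0] * x[0] + weights[1] * x[1] + bias
    return 1 if weighted_sum > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Nudge the line toward each misclassified point until none remain."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            if error != 0:
                weights[0] += learning_rate * error * x[0]
                weights[1] += learning_rate * error * x[1]
                bias += learning_rate * error
                mistakes += 1
        if mistakes == 0:  # every sample is on the correct side of the line
            break
    return weights, bias


weights, bias = train_perceptron(AND_SAMPLES)
for x, target in AND_SAMPLES:
    print(x, "->", predict(weights, bias, x), "(expected", target, ")")
```

Because the AND points can be separated by a straight line, the loop stops once an entire pass produces no mistakes.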

The perceptron “draws” a line across a two-dimensional space. Inputs on one side of the line are classified in one category, and inputs on the other side of the line are classified in another. But in 1969, the influential AI pioneer Marvin Minsky co-wrote a book attacking the perceptron, stating that it could not learn the exclusive OR (XOR) logic function in a single layer. The XOR function returns a value of 1 if exactly one of the two inputs is 1. A single-layer perceptron cannot “draw” a straight line that separates the input points (0,0) and (1,1) from the points (0,1) and (1,0). Unfortunately, many in the AI community took this to mean that the neural network was a bad idea. However, Minsky and others knew that multi-layer neural networks could perform the XOR function, because the additional layer maps the inputs into a new space in which a straight line can separate the points. Due to this misinterpretation and a confluence of events, such as a bad economy and reduced government funding, an AI winter occurred. Projects halted, a search for new theories started, and people actually changed careers, exiting the AI domain.
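The XOR argument can be checked with a short Python sketch. The weights below are hand-picked for illustration (they are assumptions, not values from Minsky's book or any trained network), and the grid search is only a spot check over a small set of candidate lines, not a proof.

```python
from itertools import product

XOR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def step_unit(w1, w2, bias, a, b):
    """A single threshold neuron: fires (1) when the weighted sum is positive."""
    return 1 if w1 * a + w2 * b + bias > 0 else 0


def count_single_layer_solutions():
    """Try every weight/bias combination on a coarse grid against XOR."""
    grid = [i * 0.5 for i in range(-4, 5)]  # -2.0, -1.5, ..., 2.0
    return sum(
        all(step_unit(w1, w2, bias, a, b) == target
            for (a, b), target in XOR_SAMPLES)
        for w1, w2, bias in product(grid, repeat=3)
    )


def xor_two_layer(a, b):
    """Two hidden units (OR and NAND) feed one output unit (AND)."""
    hidden_or = step_unit(1, 1, -0.5, a, b)
    hidden_nand = step_unit(-1, -1, 1.5, a, b)
    return step_unit(1, 1, -1.5, hidden_or, hidden_nand)


print("single-layer lines that solve XOR:", count_single_layer_solutions())
for (a, b), target in XOR_SAMPLES:
    print((a, b), "->", xor_two_layer(a, b), "(expected", target, ")")
```

No single line on the grid classifies all four points correctly, while the two-layer arrangement does: the hidden layer re-describes each input as an (OR, NAND) pair, and in that new space the two categories fall on opposite sides of one line.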

In the 1980s, people flirted with expert systems instead. These systems use a database of if-then-else rules to answer questions. A medical expert system could ask questions to attempt to isolate a condition. For example: Does the patient have a fever? If “Yes”, then ask another question and continue questioning until the pattern of symptoms matches a known medical problem. Once it hit the bottom of the rules tree, the system could look up the treatment for the proposed condition. However, expert systems could only focus on particular areas, and the number of rules exploded to the point of overwhelming the technology of the day. These systems were hard to test and they did not actually learn, meaning that the rule database constantly needed updates. But while this path wound down, researchers were quietly advancing the neural network concept. Winter turned to summer.
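A rules tree of this kind can be sketched as nested dictionaries that are walked one question at a time. Everything in the sketch is hypothetical: the second question, the condition names, and the treatment names are invented placeholders for illustration and carry no medical meaning.

```python
# Hypothetical rules tree: inner nodes ask a question, leaves name a condition.
RULES = {
    "question": "Does the patient have a fever?",
    "yes": {
        "question": "Does the patient have a cough?",  # placeholder question
        "yes": {"condition": "condition A", "treatment": "treatment A"},
        "no": {"condition": "condition B", "treatment": "treatment B"},
    },
    "no": {"condition": "no match", "treatment": None},
}


def diagnose(node, answers):
    """Follow yes/no answers down the tree until a leaf is reached."""
    asked = []
    while "question" in node:
        question = node["question"]
        asked.append(question)
        node = node["yes" if answers[question] else "no"]
    return node["condition"], node["treatment"], asked


answers = {
    "Does the patient have a fever?": True,
    "Does the patient have a cough?": False,
}
condition, treatment, asked = diagnose(RULES, answers)
print("asked:", asked)
print("result:", condition, "/", treatment)
```

The sketch also hints at the maintenance problem: every new symptom or condition means editing the tree by hand, because nothing in the system learns from the cases it sees.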

In 1986, Geoffrey Hinton co-authored a paper explaining the concept of back-propagation, which showed how feeding information about errors back into the neural network increased its ability to learn. Neuroscientists had theorized a similar phenomenon within the human brain: information travels through a hierarchy of neurons, and error information is propagated back when patterns do not match as expected. This kicked off an explosion of ideas around synthetic neural networks.
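The idea of feeding errors backward can be illustrated with a deliberately tiny network: one input, one hidden sigmoid neuron, and one linear output. This is a minimal sketch, not the formulation from the 1986 paper; the starting weights, learning rate, and single training example are arbitrary assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_gradients(w1, w2, x, target):
    """Forward pass, then push the output error back through each layer."""
    # Forward: input -> hidden neuron -> output
    hidden = sigmoid(w1 * x)
    output = w2 * hidden
    error = output - target
    loss = 0.5 * error ** 2

    # Backward: apply the chain rule from the output toward the input
    grad_w2 = error * hidden                      # how the loss changes with w2
    grad_hidden = error * w2                      # error passed back to the hidden neuron
    grad_w1 = grad_hidden * hidden * (1.0 - hidden) * x  # through the sigmoid to w1
    return loss, grad_w1, grad_w2


def train(w1=0.5, w2=-0.3, x=1.0, target=0.5, learning_rate=0.5, steps=200):
    """Repeatedly adjust both weights against their back-propagated gradients."""
    losses = []
    for _ in range(steps):
        loss, grad_w1, grad_w2 = loss_and_gradients(w1, w2, x, target)
        losses.append(loss)
        w1 -= learning_rate * grad_w1
        w2 -= learning_rate * grad_w2
    return w1, w2, losses


w1, w2, losses = train()
print("first loss:", losses[0])
print("last loss: ", losses[-1])
```

The hidden weight `w1` never sees the target directly. It is corrected only through the error signal handed back from the output layer, which is what allows networks with more than one layer to learn.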

By 1998, Professor Yann LeCun had described the first convolutional neural network that included back-propagation. However, the AI community failed to immediately grasp the ramifications of this significant idea. By 2010, though, the concept of deep learning was inspiring many researchers to revisit the CNN. Deep learning is the idea of processing huge amounts of data to find patterns or unexpected relationships. “Deep” refers to the large number of layers in the neural network.

In 2010, Fei-Fei Li employed Amazon’s Mechanical Turk system to crowdsource the manual labeling of millions of images with associated nouns. This resulted in ImageNet. She challenged academics to a contest that used this database to exercise their deep learning algorithms and to see whose solution recognized images most accurately. Each year, teams got closer and closer to 100% accuracy in identifying images. As of 2019, the error rate was about 2%, which experts in cognitive science say is better than humans would do given the same set of images. Li went on to become AI chief scientist at Google.

At this point, all the ingredients were available to build a CNN in software that could actually perform a specific task, like recognizing a limited set of objects. With the Internet providing infrastructure and massive datasets, combined with the power of computers growing exponentially, researchers could finally experiment, build, and train large neural networks. Since 2010, researchers have continued to refine deep learning by inventing new optimizations, acceleration techniques, and neural net architectures.

Along the way, product teams at various companies started to think about making money using CNNs. They worked to implement CNN software solutions in hardware so that products or product features could roll out to the marketplace. But remember, these CNN-based systems are considered weak AI, as they are only focused on one task, such as speech or pattern recognition. Weak AI is a far cry from actual intelligence.

Next time on AI Foundations, I examine mapping neurons to a model.