Applying computer vision in automated factories – the transcript

Siemens is well recognized as a leader in factory automation, and machine learning projects and tools abound for the design, realization, and optimization of equipment that efficiently manufactures products around the world. I wanted to learn more about how we bring computer vision technology together with AI to benefit factory equipment design. So, I sat down with Shahar Zuler, a data scientist and machine learning engineer at Siemens Digital Industries Software, to discuss her work. Listen to the podcast here. But for those who prefer reading to listening, here is the transcript of that podcast:

[00:00:00] Thomas Dewey: Hello, and welcome to AI Spectrum where we discuss an entire range of artificial intelligence topics. I’m Thomas Dewey, your host. And in this series, we’re talking to experts across Siemens that apply AI technology to their products. Today, we are happy to have with us Shahar Zuler, who is a Data Scientist and Machine Learning Engineer at Siemens, to discuss object recognition in factories. Welcome, Shahar.

[00:00:24] Shahar Zuler: Hi, thank you. I’m very excited to be here.

[00:00:29] Thomas Dewey: So, before we get into your projects, could you tell us what inspired your personal interest in AI?

[00:00:34] Shahar Zuler: Sure. I did my BS in Mechanical Engineering. I was working while I studied, and in my fourth year of university, almost by chance, I took a course on the most basic parts of Machine Learning. For the first time, I found a subject I was really, really interested in, to the point that I wanted to read more about it and do side projects on it. By that time, I was already working as a Mechanical Engineer, and I realized that the work wasn’t as interesting to me as the field of Machine Learning. So, I decided to make a switch, focus on Machine Learning, and really change my domain. I think it was a great decision for me, because now that I work with Machine Learning, I really enjoy the work every day. Actually, there was one specific video in that course that the professor showed that really drew me into Machine Learning. It showed research on a bunch of robots that were designed in the shape of animals, but no one actually programmed them to do anything. For example, they had a robo-spider, a robot in the shape of a spider, but no one coded how it should move forward. Instead, they used a branch of Machine Learning called reinforcement learning to train the robot by trial and error to figure out how to walk by itself. You could see it slowly progressing and trying different things until it succeeded in walking. That really caught me. At that moment, I thought it was the most interesting thing I had encountered in university, and I think that was when I decided I should focus on it.

[00:02:35] Thomas Dewey: Well, that’s great, discovering it almost accidentally while you were in school. I’ve actually seen that video, mostly in the context of not explicitly programming the AI but letting the machine learn. The robot angle probably got you interested in industry and factories. So, how do you see the effects of AI on the industrial sector playing out?

[00:02:58] Shahar Zuler: Generally, we can see big progress in Machine Learning algorithms in recent years, and specifically in vision-based Machine Learning algorithms. The technology keeps maturing, and things that were purely academic a few years ago are slowly moving into industry. The algorithms themselves just keep getting better.

Another thing is that industrial edge devices and high-performance compute machines are becoming cheaper and more powerful. To run both training and, later, inference (making the decision at test time), you need strong machines. They used to cost a lot of money and be a lot weaker, but today they are more and more powerful and much more affordable. And sensors, such as spatial cameras that also capture a depth channel, along with other sensor types, are becoming more affordable too. So, you can take your shop floor, your factory, or your robotic line, easily put many sensors in it, and use the data captured from these sensors as input to a Machine Learning model that then makes all sorts of decisions.

Those two, I think, are the main enablers for industry to use AI more and more. There is also a greater need, because products are being manufactured in smaller and smaller batches and overall manufacturing variance is higher. For example, up to five years ago or more, you would buy a new smartphone every three or four years, maybe even longer. Today, you don’t do that anymore, because the technology evolves faster, so you might replace your smartphone every one or two years. In the same way, batches in factories are getting smaller, and products are being replaced faster. This demands that robotic programs be more easily adjustable. If you want a robot to assemble your smartphone or manufacture some part of it, it should adjust quickly to new changes, and Machine Learning can deal with these types of problems very well. So, I think that’s the other side: besides the enablers for AI in this market, there’s also a stronger need for it. Those, more or less, are the effects of AI on industry as I currently see them.

[00:05:55] Thomas Dewey: You mentioned some examples, but do you have others, specifically of how Machine Learning is used in factories today?

[00:06:03] Shahar Zuler: Factories have already started to use Machine Learning for many tasks. In my day-to-day work, I deal mostly with vision-based tasks, meaning tasks based on input from a camera, but there are many others. For example, take robotic kitting, sorting, and picking. You have some kind of unstructured environment, say, parts thrown into a bin or a box, and you want a robot to look at it with a camera, understand what it sees, work out how to pick the parts, and then place them somewhere to sort them or to build small kits. That’s one family of examples. You can also use AI to perform quality tests on parts, assemblies, or packages. For example, if you have a manual or robotic line where you create different packages, then right before you pack everything into a box and send it away, a camera can look at the package and make sure no parts are missing, which helps avoid recalls and faulty packaging. You can also use Machine Learning for throughput analysis and bottleneck detection. In your line, whether it’s manual or robotic, you can use cameras to understand where your bottlenecks are and then make decisions based on that, such as redesigning or adjusting your shop floor to get a faster cycle time. That means your robotic line can do whatever it needs to do, just more quickly.
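
To make the bottleneck idea concrete, here is a minimal Python sketch. It assumes, hypothetically, that a camera system already yields per-part processing times for each station of a simple serial line; the station names and numbers are invented for illustration. In such a line, the station with the longest mean cycle time caps the throughput of the whole line, so that is where a redesign pays off first.

```python
from statistics import mean

def find_bottleneck(station_times):
    """Return (station, mean_seconds) for the slowest station.

    station_times maps a station name to a list of observed per-part
    processing times in seconds (hypothetically extracted from camera events).
    """
    averages = {name: mean(times) for name, times in station_times.items()}
    slowest = max(averages, key=averages.get)
    return slowest, averages[slowest]

def line_throughput_per_hour(station_times):
    """Upper bound on parts/hour for a serial line, set by its slowest station."""
    _, slowest_mean = find_bottleneck(station_times)
    return 3600.0 / slowest_mean

# Hypothetical observations for a three-station line
observations = {
    "pick": [4.1, 3.9, 4.0],
    "assemble": [7.8, 8.2, 8.0],
    "pack": [5.0, 5.2, 4.8],
}
station, seconds = find_bottleneck(observations)
print(station, round(seconds, 2))                        # assemble 8.0
print(round(line_throughput_per_hour(observations), 1))  # 450.0
```

Speeding up any station other than "assemble" would not raise this upper bound, which is why locating the bottleneck comes before redesigning the floor.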

[00:07:51] Shahar Zuler: And of course, you can also use Machine Learning today for shop-floor safety. You often have dangerous or heavy machinery on your shop floor, and you can use cameras to make sure people don’t get too close to danger zones; if they do, the camera input can be used to stop the dangerous machine automatically. Those, I think, are examples that are already widely used in industry. But you can also look at pioneering companies that have already started doing robotic assembly. Robotic assembly is complicated because the robot needs to make very delicate movements. If you want to do it with Machine Learning, you need a lot of training data and fairly sophisticated algorithms to understand, for example, how to wire something or how to connect two electrical boards to each other; this is very delicate work. But we are already starting to see some companies take the first steps toward this in actual industry. It’s very exciting to see it, again, moving out of academia and into industry.

Before Machine Learning, people already used computer vision algorithms, which we often refer to as classical algorithms. They have been around for decades. You could compare pixels and look for specific pixel patterns in your images, in the way someone programmed them. Those algorithms work very well in specific use cases, but once you introduce some variance in how things look, for example if the camera moves a bit from where it was supposed to be or the lighting conditions change, they tend to fail. When you use Machine Learning algorithms, they can generalize better, because you don’t explicitly code the pixel pattern you’re expecting; you just show the algorithm lots of high-variance data, and it learns by itself how to generalize and reach different conclusions. I think the fact that classical algorithms were already on shop floors actually helped AI and Machine Learning algorithms enter this industry, because the use cases already existed; they just needed improvement, along with the ability to generalize and be more robust to changes.
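
The fragility of hand-coded pixel patterns can be shown with a toy example. The sketch below assumes a classical matcher that slides a fixed template over a grayscale image and accepts a match when the sum of squared differences (SSD) falls below a hand-tuned threshold. The image, template, and threshold are invented for illustration; real classical pipelines are more sophisticated, but the failure mode under a lighting change is similar.

```python
def ssd(image, template, top, left):
    """Sum of squared differences between the template and the image patch at (top, left)."""
    return sum(
        (image[top + r][left + c] - template[r][c]) ** 2
        for r in range(len(template))
        for c in range(len(template[0]))
    )

def match(image, template, threshold):
    """Return (top, left) of the best match if its SSD is within threshold, else None."""
    h, w = len(template), len(template[0])
    candidates = [
        (ssd(image, template, top, left), (top, left))
        for top in range(len(image) - h + 1)
        for left in range(len(image[0]) - w + 1)
    ]
    best_score, best_pos = min(candidates)
    return best_pos if best_score <= threshold else None

# A 2x2 "part" pattern placed at row 1, column 2 of a 4x5 image with a dark background.
template = [[200, 50], [50, 200]]
image = [[10] * 5 for _ in range(4)]
for r in range(2):
    for c in range(2):
        image[1 + r][2 + c] = template[r][c]

THRESHOLD = 1000  # hand-tuned on the reference image
print(match(image, template, THRESHOLD))  # (1, 2)

# Same scene under brighter lighting: every pixel shifts by +40 (clipped at 255).
brighter = [[min(255, p + 40) for p in row] for row in image]
print(match(brighter, template, THRESHOLD))  # None
```

The part has not moved, yet the fixed rule rejects it because every pixel now differs by 40 gray levels. A learned model trained on images with varied lighting is meant to avoid exactly this kind of brittleness.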

[00:10:36] Thomas Dewey: It sounds like your specialty is Object Recognition. I imagine the industrial environment poses some real challenges for employing AI and ML solutions. So, what are the unique challenges of the factory floor?

[00:10:57] Shahar Zuler: Just a moment before the challenges: so far, we’ve described the tasks from the use-case perspective. If we look at them from the Machine Learning perspective, in the context of Computer Vision, we generally divide them into four different tasks. You could divide them into even more, but for me, there are four major ones.

- The most basic one is Object Recognition. In Object Recognition, your image contains mainly one object, and the Machine Learning model should recognize what it is. For example, in an automated recycling facility where a conveyor brings a new item each time, your Machine Learning algorithm might need to determine whether the item is made of plastic or paper. That’s just recognizing one label per image, so I would say it is the easiest task from a Computer Vision and Machine Learning standpoint.

- The second one is called Object Detection. In Object Detection, your image can contain a number of objects, and the Machine Learning model should identify all of them, estimate a tight bounding box around each instance, and tell what each one is. For example, in packaging quality inspection, where you use a camera to verify that a package is complete before you close and ship it, you can use Machine Learning to verify that you have, say, three screws, three nuts, and four rods. An object detection algorithm identifies all of them so you can make sure they are all there and in the correct place.

- The third type of task is Segmentation, either Semantic Segmentation or Instance Segmentation. Here, the Machine Learning model provides estimates at the pixel level: given an image, it tells you, for each pixel, which object (in instance segmentation) or which object class (in semantic segmentation) it belongs to. This information can help you extract object contours, for example. Using segmentation information, you can automatically decide on robotic grasping poses or even support the navigation of an automated guided vehicle. If a small autonomous vehicle is driving around your warehouse, bringing items from one place to another, it needs to identify where its route is, where the shelves are, and so on, and pixel-level segmentation can provide that. I would say these first three types, Object Recognition, Object Detection, and Segmentation, are more or less solved already. If you are an engineer who knows how to work with Machine Learning and you have enough data, you can probably use those algorithms without major issues. The more complicated the task, the more data you will need, but generally, those tasks are solved and working already.
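
The packaging inspection example reduces to a simple counting check once an object detector has run. The following Python sketch assumes, hypothetically, that the detector returns a list of `(label, confidence, box)` tuples; the labels, the expected counts, and the 0.5 confidence cut-off are illustrative and not taken from any particular product.

```python
from collections import Counter

# Hypothetical bill of materials for one package
EXPECTED = {"screw": 3, "nut": 3, "rod": 4}

def check_package(detections, expected=EXPECTED, min_confidence=0.5):
    """Compare detected part counts with the expected counts.

    detections: list of (label, confidence, (x_min, y_min, x_max, y_max)) tuples,
    assumed to come from an object-detection model.
    Returns {label: expected - found} for every mismatch (positive = missing,
    negative = extra). An empty dict means the package looks complete.
    """
    found = Counter(label for label, conf, _box in detections if conf >= min_confidence)
    labels = set(expected) | set(found)
    return {
        label: expected.get(label, 0) - found.get(label, 0)
        for label in labels
        if expected.get(label, 0) != found.get(label, 0)
    }

detections = (
    [("screw", 0.9, (10, 10, 20, 20))] * 3
    + [("nut", 0.8, (30, 10, 38, 18))] * 2  # one nut missing
    + [("rod", 0.95, (0, 40, 100, 48))] * 4
)
print(check_package(detections))  # {'nut': 1}
```

Checking that each part is also "in the correct place" would additionally compare every box against an expected region of the package; that step is omitted here.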

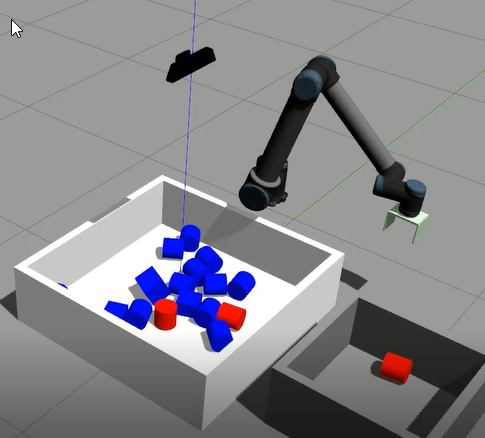

[00:14:22] Shahar Zuler: Now, the fourth one is a bit more complicated, and it’s called 6D Pose Estimation. Here, for each object in the image, the model estimates its position and rotation: three dimensions for the position and three for the rotation. That gives the exact location and orientation of the object relative to, say, the camera. This kind of model is beneficial if you’re aiming for autonomous robotic grasping. Once again, picture a bin with many parts in it: the moment you know how each part is oriented, you can more easily calculate how to grasp it with a gripper. This problem is solved today for some cases, but there are still open challenges and yearly competitions aimed at improving the results. Currently, I think this is the most complicated task that is already employed on shop floors, and it can be very beneficial for bin picking. Bin picking, I think, is currently kind of the Holy Grail in this industry. You have a bin full of parts, you don’t know how many parts are in it or how they are arranged, and you need a robot to take those parts and put them somewhere for the next step in the robotic line. So, those are the main tasks from the point of view of, let’s say, the Computer Vision Engineer or the Data Scientist.
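
A 6D pose is three translation values plus three rotation values. The pure-Python sketch below assumes the rotation is given as an axis-angle (rotation-vector) triple, which is one common convention among several (Euler angles and quaternions are others), and uses Rodrigues' rotation formula to map a grasp point defined in the object's own frame into camera coordinates. The pose and grasp point are hypothetical values.

```python
import math

def rotate(rotvec, point):
    """Rotate a 3D point by an axis-angle vector using Rodrigues' rotation formula.

    rotvec = (rx, ry, rz): its direction is the rotation axis and its length
    is the rotation angle in radians.
    """
    theta = math.sqrt(sum(c * c for c in rotvec))
    if theta < 1e-12:
        return tuple(point)  # negligible rotation
    kx, ky, kz = (c / theta for c in rotvec)  # unit rotation axis
    vx, vy, vz = point
    # Cross product k x v
    cx, cy, cz = ky * vz - kz * vy, kz * vx - kx * vz, kx * vy - ky * vx
    dot = kx * vx + ky * vy + kz * vz
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # v_rot = v cos(theta) + (k x v) sin(theta) + k (k . v)(1 - cos(theta))
    return tuple(
        v * cos_t + c * sin_t + k * dot * (1 - cos_t)
        for v, c, k in ((vx, cx, kx), (vy, cy, ky), (vz, cz, kz))
    )

def object_to_camera(pose, point_in_object):
    """Map a point from the object frame to the camera frame.

    pose = (tx, ty, tz, rx, ry, rz): translation in metres, rotation as axis-angle.
    """
    translation, rotvec = pose[:3], pose[3:]
    rotated = rotate(rotvec, point_in_object)
    return tuple(r + t for r, t in zip(rotated, translation))

# Hypothetical estimate: part 0.5 m in front of the camera, turned 90 degrees about z.
pose = (0.0, 0.0, 0.5, 0.0, 0.0, math.pi / 2)
grasp_point = (0.02, 0.0, 0.0)  # 2 cm along the part's own x axis
print([round(v, 6) for v in object_to_camera(pose, grasp_point)])  # [0.0, 0.02, 0.5]
```

Once the grasp point is expressed in camera coordinates, a known camera-to-robot calibration would carry it into the robot's frame for motion planning.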

[00:16:07] Shahar Zuler: Back to your question from before: the challenge in those cases is usually the data, because you need a lot of data to train those algorithms. Imagine that to collect data for this bin-picking scenario, you have to stop your robotic line, which costs money, because while it is stopped, the line isn’t producing or doing what it should. So, you stop the line and start taking images with very high variance: hundreds or thousands of images with different arrangements of the parts, different angles, and different lighting positions. After you finish all that, you have to label all the images manually. If you’re doing an Object Detection task, you need to draw a bounding box by hand around each part in each image. If you’re labeling for an Image Segmentation task, you have to color each pixel of the image with the color you assigned to the object it belongs to. You have to label this data manually so that, later on, you can train the algorithm. Besides being expensive, this work is also very tedious and very error-prone when done by humans. That’s often a real challenge, or an actual barrier, for people who want to employ Machine Learning models on their shop floor.
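
One common way to quantify how far a hand-drawn bounding box strays from the true one is intersection over union (IoU). This Python sketch assumes boxes are given as `(x_min, y_min, x_max, y_max)` in pixels; the coordinates are made up for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ix_min, iy_min = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix_max, iy_max = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

exact_box = (100, 100, 200, 200)   # hypothetical ground truth
manual_box = (110, 100, 210, 200)  # annotator's box, shifted 10 px to the right
print(round(iou(exact_box, manual_box), 3))  # 0.818
```

A 10-pixel slip on a 100-pixel part already drops IoU to about 0.82. Detection benchmarks typically count a prediction as correct only above a fixed IoU threshold (0.5 is a common choice), so systematic labeling errors feed directly into both training and evaluation.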

And there is another challenge that comes at a later phase. Let’s say you finish training your model and now you want to test its performance. Again, you have to stop everything happening at this robotic station and deploy your model. Then you need to start inserting parts in many, many edge cases to measure the performance of your algorithm. After that, if you’re doing bin picking, you have to make sure that once the Machine Learning model recommends a grasping position, the robot can actually reach that position without colliding with the bin or with itself. And after it grasps the part, you need to make sure it can place it in the correct spot. If you want to know how much time it will take to empty a bin with 10 parts, or 100 parts, or 1000 parts, you need to repeat this many, many times. So, this can also be pretty expensive. I think both of these challenges are very noticeable, and both can be actual barriers for Machine Learning projects. They just make everything a lot more expensive and a lot more complicated.

[00:19:04] Thomas Dewey: I’ve heard the grasping problem is one of the hardest problems to solve. Even though it seems so simple, most people don’t realize how hard it is.

[00:19:15] Shahar Zuler: Yeah, it requires logic that maybe even a three-year-old could apply, but for a computer, it’s a lot harder to understand and react.

[00:19:28] Thomas Dewey: So, basically we’ve set up the challenges. Let’s get into your actual work at Siemens, and what you’re doing to help solve some of these challenges.

[00:19:37] Shahar Zuler: A major way to address the problem of not having enough data is simply to use synthetic data. By synthetic data, I mean images taken from a 3D simulation by a virtual camera located in the simulation. Just imagine a well-made cartoon, like a 3D movie that was rendered rather than filmed. You can do much the same thing for industrial use cases: you create a 3D simulation and then use a virtual camera to take images. Not only can you take many, many images this way, with very high variance so that they look very different from each other, but you can also automate the annotation, because when you create a 3D simulation, you (or rather the computer) know exactly where every object is located. So, the annotation process can be 100% automated. Instead of taking thousands of real images, you can create thousands of synthetic images and take and annotate only a small number of real images from the actual shop floor. Then you can perform what we call, in the Machine Learning world, Transfer Learning or Fine-tuning. After a model is trained on synthetic data, you take the real data and use it to adjust the Machine Learning model’s parameters slightly in the direction of the real data, because synthetic data doesn’t look exactly like real data. This fine-tuning process lets the model reach the same performance and the same metrics with up to 90% less data than you would usually need. That’s pretty amazing.
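
The reason synthetic annotation can be fully automated is that the renderer knows which object produced each pixel. As a hedged sketch, suppose a simulator can export an instance-ID mask alongside each rendered image (0 for background, a positive integer per object); this mask format is an assumption for illustration, not the output of any specific Siemens tool. Tight bounding boxes then follow directly, with no human in the loop:

```python
def boxes_from_instance_mask(mask):
    """Derive a tight bounding box for every instance in a rendered ID mask.

    mask: 2D list of ints, 0 = background, k > 0 = pixels of object instance k.
    Returns {instance_id: (x_min, y_min, x_max, y_max)} with inclusive pixel coordinates.
    """
    boxes = {}
    for y, row in enumerate(mask):
        for x, instance in enumerate(row):
            if instance == 0:
                continue  # background pixel
            if instance not in boxes:
                boxes[instance] = (x, y, x, y)
            else:
                x0, y0, x1, y1 = boxes[instance]
                boxes[instance] = (min(x0, x), min(y0, y), max(x1, x), max(y1, y))
    return boxes

# A tiny hypothetical render containing two parts
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 2, 2],
    [0, 0, 0, 0, 2, 2],
]
print(boxes_from_instance_mask(mask))  # {1: (1, 1, 2, 2), 2: (4, 2, 5, 3)}
```

The same mask already is a per-pixel segmentation label, so segmentation ground truth comes for free along with the boxes.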

[00:21:46] Shahar Zuler: At Siemens, we’re in a unique position because we have the 3D data and an intimate knowledge of shop-floor processes. So it’s natural for us to leverage synthetic data to help our customers train Machine Learning models more easily. This is one main direction that my team, and I specifically, have been working on over the last year. The feedback from users is that synthetic data generally helps them reduce the time they need to train models and to run quick checks on new use cases. Sometimes you really want to check whether something is feasible very quickly before you decide to commit to a project. With synthetic data, you can reduce that time by 90% and focus more on the engineering work and less on the tedious work of taking images and labeling them. One more side note: the more realistic the synthetic data, the better the Machine Learning model trained on it. So, we’re actively working on making our synthetic data more and more realistic, so that users will need fewer and fewer real images when they combine them with our synthetic data.

[00:23:13] Thomas Dewey: That’s great. AI almost always boils down to data, and data is usually the problem everybody has. So, for this synthetic data, what do you do to validate that the data is correct?

[00:23:26] Shahar Zuler: As I said before, we identified two main challenges: one was how hard it is to get enough data, and the other was how to validate your model. To get enough data, as I said, we use synthetic data to ease training. To validate the trained model and how it connects to your robotic software, we let you connect the trained model not to the shop floor but to its digital twin, which is the simulation environment. You connect the model to your simulation environment, run inference so the Machine Learning model makes predictions, and then tell the robot to go and pick those parts, or make any other decision based on the model, all inside the simulation. There, you can repeat the process as many times as you want, from many randomized initial positions. You can collect statistics and get a better estimate of your average cycle time. After you finish these analyses, possibly over many iterations, you take your trained model to the shop floor and perform far fewer real physical checks. So, this removes another barrier for customers: they can check their trained model very thoroughly with minimal interference to the physical robotic line.
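
The repeated-trial validation can be sketched as a Monte Carlo loop. Here `simulate_pick` is a hypothetical stand-in for one grasp attempt in the digital twin: it is only a random stub with an invented success rate and timing, where a real setup would run the trained model and the robot simulation. The loop empties a randomized bin many times and reports statistics on the time needed to empty it.

```python
import random
from statistics import mean, pstdev

def simulate_pick(rng, success_rate=0.9, pick_seconds=(3.0, 6.0)):
    """Hypothetical stand-in for one simulated grasp attempt.

    Returns (succeeded, seconds_spent). A real digital-twin run would replace this stub.
    """
    seconds = rng.uniform(*pick_seconds)
    return rng.random() < success_rate, seconds

def time_to_empty_bin(rng, n_parts, max_attempts_per_part=5):
    """Simulate emptying one bin; returns total seconds, or None if some part is never picked."""
    total = 0.0
    for _ in range(n_parts):
        for _attempt in range(max_attempts_per_part):
            ok, seconds = simulate_pick(rng)
            total += seconds
            if ok:
                break
        else:
            return None  # every attempt failed: record this run as a failure
    return total

def monte_carlo(n_parts, runs=1000, seed=0):
    """Repeat the bin-emptying simulation and summarize the results."""
    rng = random.Random(seed)  # seeded so results are reproducible
    results = [time_to_empty_bin(rng, n_parts) for _ in range(runs)]
    times = [t for t in results if t is not None]
    return {
        "mean_s": mean(times),
        "stdev_s": pstdev(times),
        "failure_rate": 1 - len(times) / runs,
    }

for n in (10, 100):
    print(n, monte_carlo(n))
```

Because each run is cheap in simulation, the number of repetitions is limited only by compute time rather than by downtime on the physical line.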

[00:25:08] Thomas Dewey: So, you’re working with customers that have factories with lots of equipment. It sounds like partnerships are really important for solving these challenges.

[00:25:19] Shahar Zuler: Yeah, definitely. We’ve discovered that partnering with both small startups and larger companies is really beneficial for us, of course, but also for our users and customers. Small and medium startups often have expertise in one specific thing that they do very well. They often have experts from academia and from industry, and they are very familiar with the specific industry or market itself, so they learn how to solve specific problems very well. When we combine their solutions with our simulation solutions, we get a synergy: we improve both their product and our product. In the end, our users are more likely to adopt these solutions, because it’s easier for them to move between, for example, industrial robotic simulation and a framework for training Machine Learning models or employing ready-made Machine Learning algorithms. So, yes, this is very beneficial for us. We are working very hard to partner with many types of companies. This way, we also learn about those domains ourselves; we gain the knowledge, and the next time we encounter a new use case, we will know better how to approach it and which startups we can partner with for it.

[00:27:04] Thomas Dewey: Based on what you’ve seen, can you predict what you think is going to happen with Machine Learning in the next five to 10 years?

[00:27:12] Shahar Zuler: Well, I don’t know if I can predict it. But I have a gut feeling, based on people I speak with and the publications and blog posts I read about where things might be going. First of all, I think one thing is pretty clear: industrial edge devices that can run AI inference keep getting better. That means they will keep becoming more economical and affordable for smaller and smaller factories, and they can also run more complex models, because a very complex model needs high computation power to work. The progress in edge devices is really mind-blowing, I would say, and I definitely think it will help employ, or democratize, more and more AI and Machine Learning models on the factory floor. Besides that, I think there will also be a democratization of the technology itself on the algorithmic side. Until a few years ago, if you took an engineer without a PhD in Computer Vision, it might have been very hard for them to employ Image Recognition or Object Detection. But since the technology came out of academia into industry, it has become easier and easier for people with really basic Computer Vision or Machine Learning skills to employ fairly complex algorithms, because frameworks make it a lot easier today, and there are more and more publicly available datasets you can use to train them. That’s why I think this will spread more and more widely in industry.

[00:29:15] Shahar Zuler: Another thing, which I don’t know will happen within five years but I hope will happen within five to 10 years, concerns reinforcement learning, a branch I find fascinating. I hope it will mature enough to be employed in industry. So far, I’ve heard of two companies that actually say they’re using reinforcement learning on the shop floor in their manufacturing solutions, so I really hope this will emerge more and more. Today, it’s mostly in the world of academia, where researchers are making major progress in reinforcement learning. That progress is making robotic assembly better and better for unseen objects: you can have a robot look at a kit of parts to assemble, and the robot will figure out by itself how to do it and actually be able to do it. Again, I really hope this field of reinforcement learning will develop and mature enough to be employed in industry.

[00:30:36] Thomas Dewey: I think your observation about the technology moving from academia to the mainstream, to the point where the average person, a customer, or a factory could create a solution, closely parallels software development, which has reached the point of low-code and no-code development. I’ve seen some startups in the AI area looking at low-code and no-code approaches. Once that happens, you know you really have a mainstream technology that everybody can use. Thanks so much for your time. I’ve learned a lot about Machine Learning on the factory floor, and I’m sure our listeners have too. So, thanks for joining us.

[00:31:11] Shahar Zuler: Thanks for having me. I had a great time. Thank you.