The Role of Machine Learning in IC Verification – Transcript

In a recent podcast, I spoke to an expert from Siemens EDA about how machine learning has become a vital component of the IC verification process. Check out the transcript of our conversation below.

Spencer: Hello and welcome to the AI Spectrum podcast. I’m your host, Spencer Acain. In this series, we talk to experts all across Siemens about a wide range of AI topics and how they are applied to different technologies. Today I am joined by an expert from Siemens EDA with more than 20 years of experience to discuss the ways Tessent uses artificial intelligence and machine learning to detect defects and improve yields in microchips. So can you tell us about the relationship between EDA, semiconductor design, and artificial intelligence or machine learning?

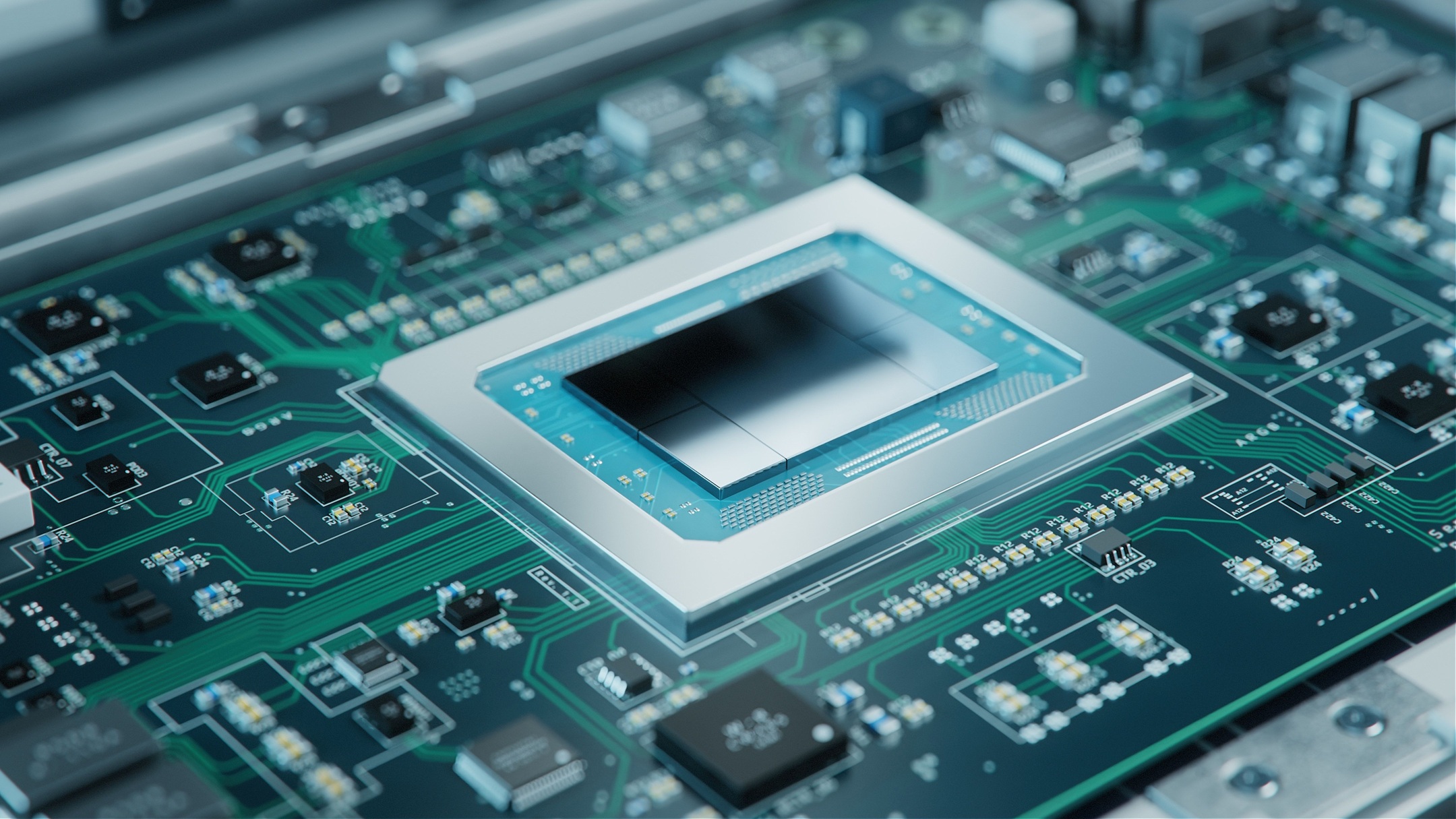

Matt: Sure. It’s a bit of a long story, but let me start by talking about the semiconductor industry and some of the complexities we have to deal with. If you look at a typical semiconductor chip today, say the one in your cell phone, it’s probably in your pocket right now. It’s the size of a thumbnail, but it has 8.5 billion transistors. These are the little switches, the ones and zeros, that help keep us all connected. These chips are the most complex things ever made by mankind. They really are. Yet they have to be tested, and they have to be 100% functional so that you can make your phone calls, watch your videos and do your social media. In reality, as you might imagine, it’s very, very difficult to test every one of those 8.5 billion transistors.

Spencer: I can imagine. Yeah.

Matt: Because you have to do it very quickly and at low cost. And of course, you don’t want your cell phone to die on you. You don’t want it to last only a week, right? You want it to last a couple of years at least.

Spencer: Of course.

Matt: So what our group does is make software that inserts circuitry into the chip to make it easier to test, so that you can test it at a high level of quality and at low cost. So far I haven’t talked about machine learning, because machine learning is really useful to us when we talk about yield and trying to understand the chips that actually fail those tests. Failing devices become a big issue when a foundry ramps a new process technology. You may have heard about this in the news recently: people want to build foundries all over the world, not just in certain parts of the world, so that we have an effective supply chain. Well, it’s very difficult to get all those 8.5 billion transistors to yield and have billions and billions of those chips come out. Getting that high yield, of course, maps directly to the cost of these chips. When you make these chips in a foundry, or factory, you make a wafer full of them; wafers are the round discs the chips are manufactured on. If you have less than, say, 99% yield, every percentage point of yield can cost those chip makers billions of dollars. So it’s very important from a cost perspective, and also from a consumer perspective. Even automobiles require computer chips today, and if you don’t have computer chips, you can’t even get a new car. So doing those tests effectively is important, but understanding how to get high yields is, I believe, even more important.
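The link Matt draws between yield and cost comes down to simple arithmetic: the cost of processing a wafer is spread only over the die that pass test. The sketch below uses a made-up wafer cost and die count (assumptions for illustration, not figures from the conversation) to show how cost per good die rises as yield falls; industry-scale dollar figures would come from effects like this multiplied over very large production volumes.

```python
def cost_per_good_die(wafer_cost: float, dies_per_wafer: int, yield_fraction: float) -> float:
    """Spread the cost of one wafer over only the die that pass test."""
    if not 0.0 < yield_fraction <= 1.0:
        raise ValueError("yield_fraction must be in (0, 1]")
    good_dies = dies_per_wafer * yield_fraction
    return wafer_cost / good_dies


# Hypothetical inputs for illustration only; not real foundry figures.
WAFER_COST = 10_000.0   # dollars per processed wafer (assumed)
DIES_PER_WAFER = 600    # thumbnail-sized die per wafer (assumed)

baseline = cost_per_good_die(WAFER_COST, DIES_PER_WAFER, 0.99)
for y in (0.99, 0.98, 0.95, 0.90):
    cost = cost_per_good_die(WAFER_COST, DIES_PER_WAFER, y)
    print(f"yield {y:.0%}: ${cost:.2f} per good die ({cost - baseline:+.2f} vs. 99%)")
```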

Spencer: And so is that where AI, or machine learning, comes in: getting those high yields?

Matt: Yeah. In the test process, some of those chips fail, and there’s a process called logic diagnosis. You take the failure data, the fail log, if you will, from the test equipment, combine it with design information, and through some simulation processes you can figure out where the defects are in the chip. You can think of these very simply as wires, tiny, tiny wires: some of them are broken and some of them are shorted together, and these cause failures in the die. The challenge is that the diagnosis result is not perfect, meaning there’s some ambiguity in it. So I came up with a metaphor: think of it like a forest. Imagine a very dense forest of billions of trees with only three lumberjacks in it, OK? Those lumberjacks are out there with their axes. One guy chops, and you hear one chop. Then you hear another chop. It’s coming from roughly the same area, but this is a big forest, right? So you don’t know exactly where it’s coming from. Then the third lumberjack chops. Maybe you hear two chops at a time, maybe two or three at a time. It’s very hard to figure out where each chop is coming from in such a big forest, right? And that’s really what we want to do. Instead of just seeing the forest, we want to see the individual trees, because that’s where the defects are.
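To make the diagnosis idea and its ambiguity concrete, here is a minimal sketch of diagnosis by fault simulation on a hypothetical two-gate circuit. It uses the classic single stuck-at fault model as a stand-in for a broken wire; the netlist, fault model, and function names are illustrative assumptions, not how Tessent works internally. Each candidate fault is simulated, and the ones whose predicted failures match the tester’s fail log are reported as suspects.

```python
from itertools import product

# Hypothetical toy netlist: n1 = AND(a, b); out = OR(n1, c).
INPUTS = ("a", "b", "c")
NETS = INPUTS + ("n1", "out")
PATTERNS = list(product((0, 1), repeat=len(INPUTS)))  # exhaustive test set


def simulate(pattern, fault=None):
    """Return the output bit; `fault` is (net, stuck_value) or None for a good chip."""
    values = {}

    def drive(net, value):
        # A stuck-at fault overrides whatever value the net would normally carry.
        if fault is not None and fault[0] == net:
            value = fault[1]
        values[net] = value
        return value

    for net, bit in zip(INPUTS, pattern):
        drive(net, bit)
    drive("n1", values["a"] & values["b"])
    return drive("out", values["n1"] | values["c"])


def failing_patterns(fault):
    """Patterns on which a chip with this fault would disagree with a good chip."""
    return frozenset(p for p in PATTERNS if simulate(p, fault) != simulate(p))


def diagnose(observed_fails):
    """Every single stuck-at fault whose simulated failures match the tester's fail log."""
    candidates = [(net, v) for net in NETS for v in (0, 1)]
    return sorted(f for f in candidates if failing_patterns(f) == observed_fails)


# Pretend the real defect is a broken wire on net b, modeled as b stuck-at-0.
# The tester only records which patterns failed, not which wire is broken.
observed = failing_patterns(("b", 0))
print(diagnose(observed))
```

The diagnosis returns three suspects (a, b, and n1 stuck-at-0) because all three fail on exactly the same pattern and cannot be told apart at the output; that is the kind of ambiguity the forest metaphor describes. Real diagnosis tools work on far larger circuits and richer defect models, such as bridges between wires.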

Spencer: Of course, yeah.

Matt: So it’s really an impossible problem for one person to figure out with just their ears. What machine learning does is take a population. We say, let’s look at hundreds of identical forests and listen to all those chops, and let the machine learning figure out where those chops are coming from, what those distributions are, if you will. That’s my analogy for the machine learning telling us: here is the most likely part of the forest where lumberjack one is, and here’s the most likely part of the forest where lumberjack two is.

Matt: Does that make any sense?

Spencer: Yeah, yeah, I think I get it now. There’s a lot of noise, almost, and it’s all overlapping. Once you actually have your failure data, you need a way to differentiate and say: where is this failure coming from? Or, in your analogy, where is my lumberjack chopping down trees in this huge, huge forest?

Matt: And you’ve got things echoing around. And Spencer, you actually came up with the exact word for it: it’s noise. There’s diagnosis noise in that data. In the chip, what that means is that for each defect, there’s a forest of potential trees, or potential wires, it could be coming from, in what we call a cone of logic. It’s just not knowable, because many of those paths, those wires, are equivalent, so you can’t really tell them apart electrically. That’s where the machine learning comes in. It analyzes the different signals from the diagnosis data to figure out the real root cause of the failure, because I want to know that so I can fix it.
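One simple way to see why a population helps: if each failing die’s diagnosis lists several equally plausible suspect wires, no single report is conclusive, but summing the evidence across many die makes a common culprit stand out. The sketch below splits each die’s single "vote" evenly across its suspects. The wire names and reports are hypothetical, and this naive tally is only an illustration, not the algorithm Siemens uses.

```python
from collections import Counter


def aggregate_candidates(reports):
    """Split each failing die's single vote evenly across its ambiguous suspects."""
    scores = Counter()
    for suspects in reports:
        share = 1.0 / len(suspects)
        for suspect in suspects:
            scores[suspect] += share
    return scores


# Hypothetical diagnosis reports: each failing die names several equally plausible wires.
reports = [
    {"wire_17", "wire_42", "wire_88"},
    {"wire_42", "wire_90"},
    {"wire_05", "wire_17", "wire_42"},
    {"wire_42", "wire_63"},
    {"wire_88", "wire_90"},
]

scores = aggregate_candidates(reports)
for wire, score in scores.most_common(3):
    print(f"{wire}: {score:.2f}")
```

No single report singles out wire_42, yet it appears in four of the five, so its aggregated score is well ahead of the rest.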

Spencer: Well, that makes sense. But is this something you could do without machine learning? Or do you have to use machine learning because there’s such a volume of data and it’s so difficult?

Matt: Yeah, boy, you could. You could hire a whole bunch of lumberjacks and start cutting the entire forest down until you find the other guy. Or you could use a big bulldozer. But that’s very expensive and impractical. In the world of yield, that bulldozer, if you will, is called failure analysis. You could take a whole bunch of these failing die and literally dig into them with focused ion beams and a lot of other high-tech physics equipment, physically digging into the chips to find these defects. It’s just not scalable. It’s like looking for that one tree in a forest of a billion trees, so it’s just not practical. What customers do instead is some sampling, and they run failure analysis on that sample to create what’s called a defect pareto, which tells them, on average, which types of defects are most common in a given population.
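A defect pareto is essentially a ranked tally: count how often each defect type shows up in the failure-analysis sample, sort from most to least common, and track the cumulative share so engineers can see which few types account for most failures. The defect names and counts below are invented for illustration.

```python
def defect_pareto(counts):
    """Rank defect types by count and report each one's share and the running total."""
    total = sum(counts.values())
    rows, running = [], 0
    for defect, n in sorted(counts.items(), key=lambda kv: kv[1], reverse=True):
        running += n
        rows.append((defect, n, 100.0 * n / total, 100.0 * running / total))
    return rows


# Hypothetical counts from a failure-analysis sample of 50 failing die.
fa_sample = {"via open": 12, "metal bridge": 21, "gate oxide": 6, "particle": 4, "metal open": 7}

for defect, n, pct, cum in defect_pareto(fa_sample):
    print(f"{defect:<14}{n:>4}{pct:>7.1f}%{cum:>7.1f}%")
```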

Spencer: OK. So is this a problem that’s arisen as chips have gotten so much bigger? We’re into the billions and billions of transistors on a chip now, but 20 years ago that was a much smaller number. Is using machine learning something that has appeared naturally in your work just because of how dense and complex things have gotten over the last few decades?

Matt: It really has. I’d say about 10 years ago we started using machine learning techniques. We didn’t call them machine learning; we just called them Bayesian statistics with expectation algorithms and things. But it is an inference-based machine learning model. Before then, manufacturers could do some failure analysis and get a good idea of what the issues were. Their techniques were more effective back then, and honestly, it didn’t make a lot of sense to take on more complicated analysis. But about a decade or so ago, or even a little before that, some of the larger manufacturers started to build their own custom versions of this diagnosis, and that’s when we developed our commercially available versions. Since we get to work with all of the major manufacturers in the world, it’s very important for us to partner with them to understand the nitty-gritty details.
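Matt describes the early techniques as Bayesian statistics with expectation algorithms. A standard method in that family is expectation-maximization (EM); whether it matches Siemens’s actual model is an assumption, and the sketch below is only a generic illustration with hypothetical suspect names. It assumes each failing die has exactly one true root cause somewhere in its reported suspect set, and that a report is equally likely under each suspect it lists. EM then alternates between estimating, for every die, how likely each suspect is to be the culprit given the current population-wide frequencies (E-step), and re-estimating those frequencies from the averaged responsibilities (M-step).

```python
def em_root_cause(reports, n_iter=500, tol=1e-10):
    """Estimate how often each suspect is the true root cause across a population.

    Assumes each die has exactly one true cause inside its reported suspect set,
    and that the report is equally likely under every suspect it lists.
    """
    suspects = sorted(set().union(*reports))
    prior = {s: 1.0 / len(suspects) for s in suspects}  # start from a uniform guess
    for _ in range(n_iter):
        # E-step: posterior responsibility of each suspect for each die, summed.
        totals = dict.fromkeys(suspects, 0.0)
        for report in reports:
            norm = sum(prior[s] for s in report)
            for s in report:
                totals[s] += prior[s] / norm
        # M-step: new frequency = average responsibility across all failing die.
        new_prior = {s: totals[s] / len(reports) for s in suspects}
        delta = max(abs(new_prior[s] - prior[s]) for s in suspects)
        prior = new_prior
        if delta < tol:
            break
    return prior


# Hypothetical ambiguous diagnosis reports, one suspect set per failing die.
reports = [
    {"wire_17", "wire_42", "wire_88"},
    {"wire_42", "wire_90"},
    {"wire_05", "wire_17", "wire_42"},
    {"wire_42", "wire_63"},
    {"wire_88", "wire_90"},
]

estimate = em_root_cause(reports)
for suspect, p in sorted(estimate.items(), key=lambda kv: -kv[1]):
    print(f"{suspect}: {p:.2f}")
```

Compared with splitting each die’s vote evenly, EM lets suspects that keep reappearing across the population absorb the credit from shared reports, so rarely implicated suspects shrink toward zero.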

Matt: It’s very confidential information, of course, so we’re very respectful of that. But what we do is make our defect models and algorithms better based on learning from all these different customers across all these different process nodes. And so we’re able to provide better tools to them than they could actually provide themselves.

Spencer: So you mentioned learning from all these customers with all their data. Are the ML models you’re using in your tools pre-trained? Do you send them out pre-trained, or are they trained on each node, wafer, or chip they need to look at? How does that work?

Matt: Excellent question, because we wish all that data could be used directly as training data, in more of a supervised machine learning approach. Unfortunately, every customer calls defects something different, so there’s no common naming or tagging structure for all of this information. So we’re not really able to use any sort of supervised learning. What we do instead is characterize the defect modes and improve the algorithms that go into the diagnosis piece. Some of that overlaps with machine learning, but it mostly comes in the form of heuristics, tweaks, and improvements to the tool based on a lot of empirical knowledge.

Spencer: I see. So it sounds like it’s been challenging to integrate the data you would need to build a really smart model into these systems.

Matt: Right. It’s not like image recognition, where you can data mine millions and millions of pictures of a cat or a face. These are signatures that are pretty unique to each customer and process node. That’s why it takes a very close partnership with them. We have to translate what we see from their process nodes and their products into the defects we see, and then codify that in the algorithm, if you will. So it’s a very manual process, but we haven’t found a better way of doing it just yet. What I would love is for the industry to standardize more on what to call, say, a broken wire on a certain layer of the device. But as we talked about before, they used to do all the failure analysis without this tool, so each company had its own laboratories and its own scientists and engineers. And one thing about failure analysis engineers, because I used to work at a company that sold that type of equipment: they’re very creative, and they come up with creative names. The only standard defect name I’ve heard of is the mouse bite, historically one of the classic names for a chunk of metal taken out of the side of a wire. So you can imagine what the hundreds of other defects could be called at different companies.

Spencer: I can imagine it being extremely difficult to train a computer when every company calls every component and every possible defect by some internal name that only makes sense to them. Then you’re out here trying to teach a computer that this particular failure mode, or this picture, is one thing at one company and another thing at another company. I could see that being extremely difficult.

Matt: We have lobbied the industry to try to make progress here, but the confounding factor is the level of sensitivity around security and intellectual property. If you have higher yields, you’re going to be more competitive in the market and more profitable. So it’s very, very sensitive information.

Spencer: Kind of like a trade secret. They don’t want to share why they’re more successful than their competitors; they wouldn’t want to give away that advantage. It’s understandable, especially since, like you said, these are the most complex and advanced devices ever made by human hands.

Matt: But it makes for an exciting, exciting area to work in.

Spencer: Well, is there anything else interesting you’re working on that you’d like to share with us right now?

Matt: Um, yeah. We’re working on this, but it’s more of a larger industry trend. The diagnosis piece I’ve been talking about is just one data type that people have available to them. Engineers trying to solve a yield problem have access to thousands of different parameters: test parameters, design parameters, parametric measurements. So one thing we’re working on is a collaboration with a company called PDF Solutions to integrate our diagnosis and YieldInsight, which is the machine learning tool, into a big data platform, so that you can do cross-correlations across all these disparate data types. We’ve found that this can help accelerate the time to root cause, and honestly, it can help reduce the noise we were talking about as well. In that case, we do have a semantic model, if you will, so that the data types and where they come from are all traceable to the one particular chip they came from. And if you can trace all that data, you can then cross-correlate it with other data sources. So that’s pretty exciting. That work is ongoing; we don’t have anything productized yet, but it’s a pretty exciting space.
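The value of tracing every record back to one specific die is that data from otherwise unrelated sources can be joined and correlated. A minimal sketch, with hypothetical die IDs, a hypothetical per-die diagnosis flag, and a hypothetical parametric measurement; it is not the Siemens/PDF Solutions platform or its API. The two sources are joined on die ID, and a Pearson correlation is computed over the die present in both.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def join_by_die(a, b):
    """Keep only die present in both sources, in a stable order."""
    shared = sorted(a.keys() & b.keys())
    return shared, [a[k] for k in shared], [b[k] for k in shared]


# Hypothetical per-die records from two separate data sources, keyed by die ID.
diagnosis_flag = {  # 1 if diagnosis implicated a metal-2 open on that die (assumed field)
    "W1-D03": 1, "W1-D07": 0, "W2-D01": 1, "W2-D05": 0, "W3-D02": 1, "W3-D09": 0,
}
via_resistance = {  # hypothetical parametric measurement, in ohms
    "W1-D03": 5.9, "W1-D07": 4.1, "W2-D01": 6.3, "W2-D05": 4.4,
    "W3-D02": 5.7, "W3-D09": 4.0, "W3-D11": 4.2,  # W3-D11 has no diagnosis record
}

dies, flags, resistance = join_by_die(diagnosis_flag, via_resistance)
print(f"{len(dies)} matched die, r = {pearson(flags, resistance):.2f}")
```

A strong correlation like this only points engineers toward where to look; on its own it does not establish the root cause.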

Spencer: Yeah. It sounds like it has real potential to move things along and be the next step for you guys. But I think we’re just about out of time here. Once again, I’ve been your host, Spencer Acain, and this has been the AI Spectrum podcast. Tune in next time to hear more about the exciting world of AI.

Siemens Digital Industries Software helps organizations of all sizes digitally transform using software, hardware and services from the Siemens Xcelerator business platform. Siemens’ software and the comprehensive digital twin enable companies to optimize their design, engineering and manufacturing processes to turn today’s ideas into the sustainable products of the future. From chips to entire systems, from product to process, across all industries. Siemens Digital Industries Software – Accelerating transformation.