High-Level Synthesis builds next-generation AI accelerators

AI is poised to integrate with technology at nearly every level of society, but for many of those applications, existing chip designs may not be able to provide the required performance and efficiency. AI models are growing increasingly large, with computational requirements to match. Running large numbers of high-power, general-purpose GPUs or CPUs may be viable in datacenters, but edge applications such as self-driving cars, smartphones, and other smart devices need a new approach. Neural processing units (NPUs) are chips designed specifically to accelerate AI inference at a fraction of the power and size of more general-purpose GPUs. Now, thanks to High-Level Synthesis (HLS), this can be taken a step further, allowing algorithm-specific chips capable of lightning-fast inference at a fraction of the power to be created rapidly.

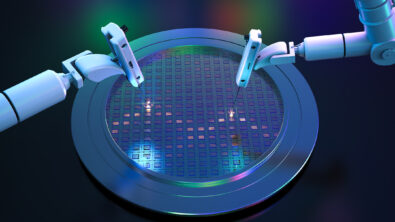

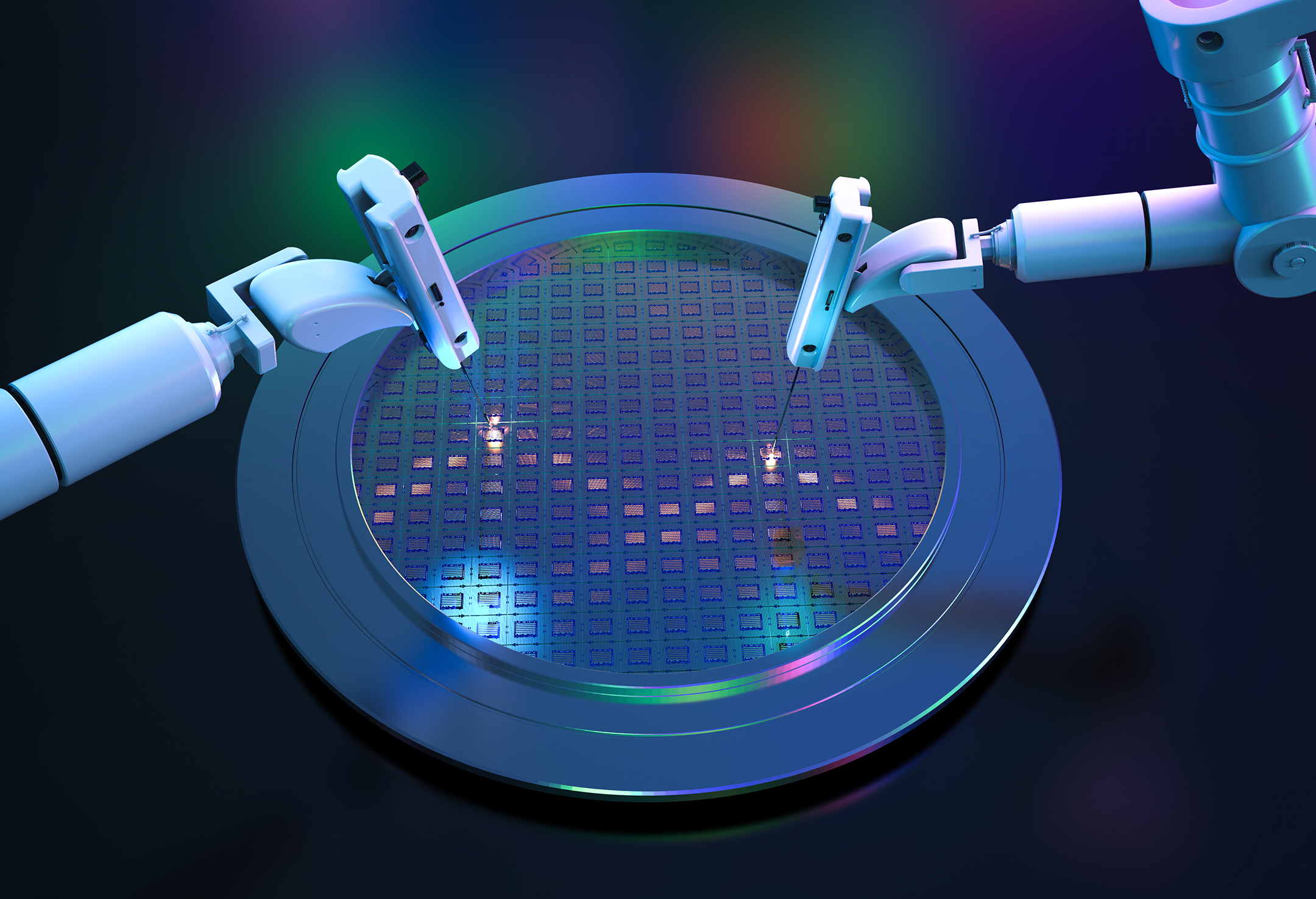

High-Level Synthesis is the process of converting algorithms described in C++ into functional chip designs. Traditional methods operate at the register transfer level (RTL), where every wire, register and operator must be described by hand. HLS instead works at a higher level of abstraction: the designer describes the algorithm, and the tool automatically generates an optimized RTL implementation of it. Since AI is, at its core, a collection of highly complex algorithms, this makes HLS a natural fit for developing fast, efficient chips for AI inference, especially since chips created using HLS can also be more efficient than their manually designed counterparts.
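To make this concrete, here is a minimal sketch of the style of C++ an HLS flow typically starts from: one fully connected layer followed by a ReLU. It uses fixed array sizes and no dynamic memory, so a tool can map every loop and array onto hardware. The layer sizes, the 8-bit/32-bit integer types and the function name `dense_relu` are hypothetical choices for illustration. They are not taken from Catapult or any real model. Production HLS code often uses bit-accurate integer and fixed-point types, such as the Algorithmic C (AC) datatypes used with Catapult, instead of standard integers.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Hypothetical layer size, fixed at compile time. HLS tools generally need
// static bounds like these to size registers, multipliers and memories.
constexpr std::size_t kInputs = 4;
constexpr std::size_t kOutputs = 2;

// One fully connected layer: out[o] = max(0, bias[o] + sum_i w[o][i] * x[i]).
// 8-bit operands with 32-bit accumulation are a common choice for inference.
std::array<std::int32_t, kOutputs> dense_relu(
    const std::array<std::int8_t, kInputs>& x,
    const std::array<std::array<std::int8_t, kInputs>, kOutputs>& w,
    const std::array<std::int32_t, kOutputs>& bias) {
  std::array<std::int32_t, kOutputs> out{};
  for (std::size_t o = 0; o < kOutputs; ++o) {    // could be unrolled into parallel neurons
    std::int32_t acc = bias[o];
    for (std::size_t i = 0; i < kInputs; ++i) {   // could be pipelined: one MAC per clock
      acc += static_cast<std::int32_t>(w[o][i]) * static_cast<std::int32_t>(x[i]);
    }
    out[o] = acc > 0 ? acc : 0;                   // ReLU activation
  }
  return out;
}
```

Nothing in the function says how many multipliers to build or how many clock cycles it should take. Those decisions are left to the synthesis step, which is what allows the same source to yield very different hardware.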

While HLS isn’t required to create NPUs, it is key to creating application- and algorithm-specific chips quickly and within budget. A GPU or CPU is designed to run software in general. An NPU, by contrast, is designed specifically to accelerate the types of operations used by AI code. Even so, on an application-by-application basis, NPUs can still leave a lot of performance on the table. A general-purpose NPU must work with any AI algorithm a user might want to run on it. To preserve all of that functionality, it cannot deploy tricks and optimizations that would improve performance for one specific algorithm, or even certain chip-wide changes.

Designing and validating chips on a per-application basis using traditional RTL methods is simply impractical. Yet failing to improve the efficiency of AI accelerators will prevent AI from being adopted in many areas. Using HLS to develop bespoke AI accelerators greatly reduces the time it takes to bring a chip from concept to finished product, which allows the traditionally slow chip design process to keep pace more closely with the rapidly developing field of AI research. HLS relies on robust automation, which shortens design cycles and makes completed designs easier to validate. It also offers the potential to help find new and innovative chip designs.

In the traditional chip design process, it is rare for more than a single family of designs to be considered, simply because developing a completely new design from scratch is prohibitively expensive and time-consuming. With HLS tools, new designs can be generated by changing the synthesis directives (for example, how far loops are unrolled or pipelined) and letting the tool translate the same C++ code into a new proposed chip design. This means dozens or hundreds more designs could be considered in the same amount of time, potentially leading to the discovery of faster or more efficient ways of designing accelerators for a particular algorithm.
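As a rough illustration of this kind of design-space exploration, the sketch below keeps one algorithm (an integer dot product) and exposes a single architectural knob as a template parameter: the number of parallel multiply-accumulate lanes. This is an assumption made to keep the example self-contained. In an actual HLS flow, such choices are normally expressed as tool directives (for instance, a loop unroll factor) rather than in the C++ itself. The `estimate` cost model is also a deliberately crude, hypothetical first-order approximation, not output from any tool.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Hypothetical design knob: how many multiply-accumulate lanes the hardware gets.
// In a real HLS flow this would usually be a directive; here it is a template
// parameter so several "variants" can be built from one source.
template <std::size_t N, std::size_t Lanes>
std::int32_t dot(const std::array<std::int8_t, N>& a,
                 const std::array<std::int8_t, N>& b) {
  static_assert(Lanes > 0 && N % Lanes == 0, "N must be a positive multiple of Lanes");
  std::int32_t acc = 0;
  for (std::size_t step = 0; step < N / Lanes; ++step) {  // assumed: ~one clock cycle per step
    std::int32_t partial = 0;
    for (std::size_t l = 0; l < Lanes; ++l) {             // Lanes multipliers working side by side
      const std::size_t i = step * Lanes + l;
      partial += static_cast<std::int32_t>(a[i]) * static_cast<std::int32_t>(b[i]);
    }
    acc += partial;
  }
  return acc;
}

// Crude, hypothetical cost model: latency ~ N / Lanes steps, area ~ Lanes multipliers.
struct Estimate {
  std::size_t cycles;
  std::size_t multipliers;
};

template <std::size_t N, std::size_t Lanes>
constexpr Estimate estimate() {
  return Estimate{N / Lanes, Lanes};
}
```

Every variant computes the same result. What changes is the trade-off between latency (about `N / Lanes` steps) and area (about `Lanes` multipliers). Sweeping that knob and comparing the synthesized results is the kind of exploration that becomes impractical when each variant must be written by hand in RTL.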

HLS tools, such as Catapult offered by Siemens EDA, will be critical in designing not only high-power, custom AI accelerators for datacenters but also the tiny embedded chips that will power the next generation of smart, AI-driven devices. With the advent of power-hungry large language models and the desire to deploy them everywhere, from massive models like ChatGPT or Gemini down to smartphones and laptops, the need for highly efficient AI accelerators will only continue to grow. As everything from hardware to software grows smarter each year, supporting technologies like HLS must rise to meet the challenges this presents. To learn more about what HLS is and how it will apply to AI in the future, check out this article.

Siemens Digital Industries Software helps organizations of all sizes digitally transform using software, hardware and services from the Siemens Xcelerator business platform. Siemens’ software and the comprehensive digital twin enable companies to optimize their design, engineering and manufacturing processes to turn today’s ideas into the sustainable products of the future. From chips to entire systems, from product to process, across all industries. Siemens Digital Industries Software – Accelerating transformation.