ISO 26262 fault analysis – worst case is really the worst

Worst case analysis is often a self-fulfilling prophecy: by preparing for the worst, you actually make it happen. In our previous post about ISO 26262 faults, we highlighted the importance of accurate fault analysis and explained why defaulting to worst case could lead to overdesign, extra costs, schedule delays, higher risk to core functionality, bankruptcy, layoffs, disease and death ;-). In this post, we will start from the most elementary worst case analysis and refine it until it deserves to be called smart.

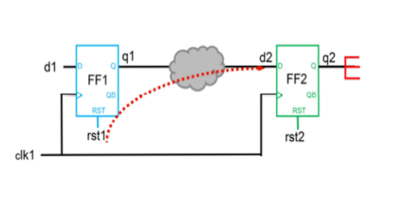

Recap: to get a design ISO 26262 certified, you need to prove that the risk of a safety goal violation due to random hardware faults is less than a tiny threshold. Though the probability of a single gate going crazy is much smaller than that threshold, the safety goal is likely to be influenced by far more than one gate. Hence the very small probability per gate will be multiplied by *something*. What this *something* will be is at the heart of our discussion.
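
In symbols, the recap boils down to the inequality below. The symbols are our own shorthand: $\lambda_{\text{gate}}$ is the per-gate fault probability, $N_{\text{eff}}$ is *something*, $\tau$ is the tiny threshold, and $w_g$ is a weight expressing how much gate $g$ (out of the set of all gates $G$) counts toward the safety goal. This linear model is a simplification that mirrors the reasoning in this post, not the exact metric definitions of ISO 26262-5.

$$
P_{\text{SG}} \approx \lambda_{\text{gate}} \cdot N_{\text{eff}} < \tau
\quad\Longrightarrow\quad
N_{\text{eff}} < \frac{\tau}{\lambda_{\text{gate}}},
\qquad
N_{\text{eff}} = \sum_{g \in G} w_g,\quad 0 \le w_g \le 1
$$

Under this reading, the crudest analysis sets $w_g = 1$ for every gate, so $N_{\text{eff}} = |G|$, and each refinement amounts to lowering some of the $w_g$.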

The most elementary worst case analysis you could do is to assume that any random hardware fault in any gate in your design will cause a safety goal violation. That basically sets *something* to the total number of gates in your design and will most probably put you way over the tiny threshold required for ISO 26262 certification.
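
A minimal sketch of this elementary check, assuming the simplified "per-gate probability times *something*" model from the recap. The netlist format (gate name mapped to the names of its fan-in signals) and all function names are hypothetical and chosen only for illustration.

```python
def worst_case_something(netlist: dict[str, list[str]]) -> int:
    """Elementary worst case: every gate in the design counts toward the safety goal."""
    return len(netlist)


def violation_estimate(per_gate_fault_prob: float, something: float) -> float:
    """Simplified model: per-gate fault probability multiplied by *something*."""
    return per_gate_fault_prob * something


def meets_threshold(per_gate_fault_prob: float, something: float, threshold: float) -> bool:
    """True if the estimated violation probability stays below the threshold."""
    return violation_estimate(per_gate_fault_prob, something) < threshold
```

With a realistic gate count, `worst_case_something` grows so large that `meets_threshold` fails even for a very small per-gate probability, which is exactly the problem described above.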

One level up, but not yet smart enough, is setting *something* to the number of gates that actually have a physical connection to logic related to the safety goal. In just about any design, some gates (debug, status) will lack a physical connection to the safety-critical area. Faults in these gates can never impact the safety goal and can be left out of the calculation, usually reducing *something* by 10-20%. In the same vein, you’ll usually also find some logic that, even though connected to safety-critical areas, can’t influence them under certain input assumptions. Formal tools can smell such logic from miles away and can easily get you another 5% off.
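
The structural filter can be sketched as a backward traversal (a fan-in cone, or "cone of influence") starting from the safety-critical outputs. This is a minimal sketch under stated assumptions: the netlist format (gate name mapped to fan-in signal names, with primary inputs absent from the keys) and all names are hypothetical, and it covers only physical connectivity, not the input-assumption pruning that formal tools add on top.

```python
from collections import deque


def fan_in_cone(netlist: dict[str, list[str]], safety_outputs: list[str]) -> set[str]:
    """Return every gate with a structural path to any safety-critical output."""
    cone: set[str] = set()
    queue = deque(safety_outputs)
    while queue:
        gate = queue.popleft()
        # Skip gates already visited and primary inputs (not keys in the netlist).
        if gate in cone or gate not in netlist:
            continue
        cone.add(gate)
        queue.extend(netlist[gate])
    return cone


# Hypothetical example: g_dbg and g_status model debug/status logic that
# reads from the safety path but never drives it.
example_netlist = {
    "g_brake": ["g_and1", "g_or1"],
    "g_and1": ["in_a", "in_b"],
    "g_or1": ["in_c", "g_and1"],
    "g_dbg": ["g_and1", "in_d"],
    "g_status": ["g_dbg"],
}
```

Here `fan_in_cone(example_netlist, ["g_brake"])` keeps three of the five gates; the two debug/status gates drop out of *something*.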

Adding a gray scale to our black-and-white analysis is a big step up in both pain and gain. Instead of asking whether a gate can or can’t influence the safety goal, we ask what the gate’s chances of influencing the safety goal are. Take an OR gate, for example: as long as one input is ‘1’, the other inputs can go as crazy as they want. If one input is ‘1’ for 99.9% of the time, faults in anything connected to the other inputs can reach the output only during the remaining 0.1%, so they can almost entirely be left out. That’s where the big bucks are hiding.
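
A minimal sketch of the gray-scale idea for the OR gate example: an input can change the output only when all the other inputs are ‘0’. The sketch assumes the inputs are statistically independent, which real designs rarely guarantee, and the function names are hypothetical. Summing per-gate weights instead of counting gates is one simple way to turn these chances into a smaller *something*.

```python
from math import prod


def or_input_observability(p_one: list[float], i: int) -> float:
    """Probability that input i of an OR gate can change the output.

    Input i matters only when every *other* input is 0.
    Assumes the inputs are statistically independent.
    """
    return prod(1.0 - p for j, p in enumerate(p_one) if j != i)


def weighted_something(weights: dict[str, float]) -> float:
    """Gray-scale *something*: sum of per-gate chances of influencing the goal."""
    return sum(weights.values())
```

For a two-input OR gate whose first input is ‘1’ 99.9% of the time, `or_input_observability([0.999, 0.5], 1)` is about 0.001, so logic feeding the second input contributes roughly a thousandth of what the black-and-white analysis charged it.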

How do we know the percentage of time in which a given gate input actually has an impact on its output? Well, if you’re running a rigorous regression on your DUT (and if you’re shooting for ISO 26262, you probably are), that information should be within reach. The only question is how to store it, extract it, and factor it into your calculation. If you know how to do it, you’re hereby declared pretty smart. If not, read more in our cookbook.
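
One possible way to extract that percentage from regression data is to replay a gate’s recorded input vectors and count the cycles in which flipping a given input would flip the output (a per-cycle Boolean difference). This is a minimal sketch, not necessarily the method described in our cookbook: the trace format (one tuple of 0/1 input values per cycle) and the function name are hypothetical, and it measures observability only at the gate’s own output, not all the way to the safety goal.

```python
from typing import Callable, Sequence


def observability_from_trace(
    gate_fn: Callable[[Sequence[int]], int],
    trace: Sequence[Sequence[int]],
    i: int,
) -> float:
    """Fraction of recorded cycles in which input i affects the gate output."""
    if not trace:
        raise ValueError("trace must contain at least one cycle")
    hits = 0
    for vec in trace:
        flipped = list(vec)
        flipped[i] ^= 1  # inject a bit flip on input i for this cycle only
        if gate_fn(vec) != gate_fn(flipped):
            hits += 1
    return hits / len(trace)


def or_gate(v: Sequence[int]) -> int:
    """Reference model of an OR gate."""
    return int(any(v))
```

For the four-cycle OR trace `[(1, 0), (1, 1), (1, 0), (0, 1)]`, input 0 is observable in two cycles (0.5) and input 1 in only one (0.25), because input 1 matters only when input 0 is ‘0’.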
