Tools In A Methodology Toolbox

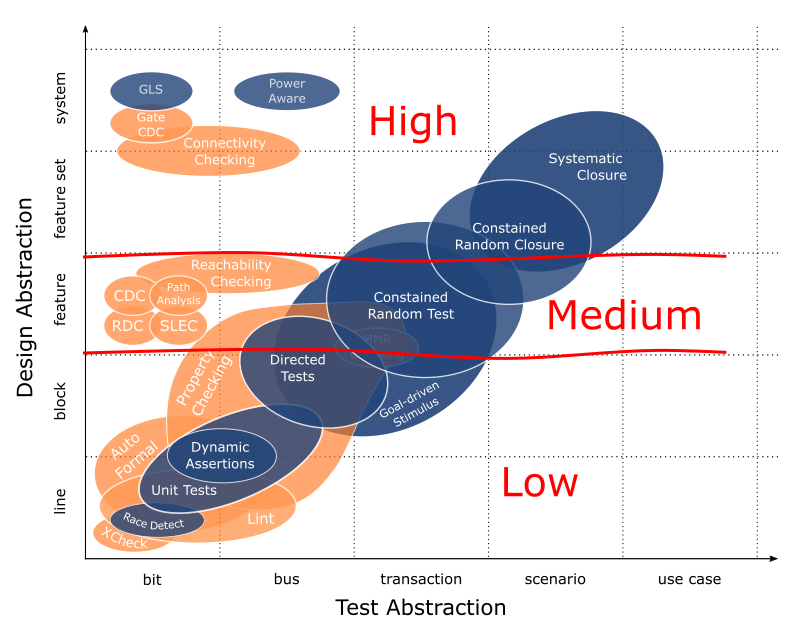

To understand how techniques fit together as part of a comprehensive verification methodology, we’re mapping techniques as a function of design abstraction they’re intended to address and verification abstraction through which they’re best applied. This is what we started in Verification Methodology Reset. At the end of that I promised to come back and describe the colour coding I used and the stuff in the bubbles so that’s what we’ll do here.

For review, this is the graphic we’re working from…

The colours represent the underlying engine that powers each technique. Blue is simulation, orange is formal.

I should say I waffled on the colour coding because this discussion needs to be engine agnostic. Part of how we develop tunnel vision in verification – and incomplete methodologies – is by tuning out discussions that don’t support our own experience. For example, aside from a week-long formal tool eval back in 2003, I’ve completely ignored all things formal. Simulation is the only thing I’ve ever known. My ignorance – let’s call it what it is – has held me back for a long time and I’m incomplete as a result.

I don’t want people to be held back anymore. We’ve long passed the point where formal is strictly for formal specialists. Likewise for constrained random and others. We no longer need to be experts to reap the benefits of a particular practice so be careful not to infer too much from the colour coding. It’s for crude – and increasingly obsolete – association only; nothing more.

To the bubbles themselves, we’ll split the discussion into three parts, first set apply to lowest level line/block design abstractions, second focuses on the middle feature abstraction and third is high-level feature set/system (I have the low, medium and high scribbled over the graphic for reference). Considering part of the point here is to pull people of all verification backgrounds together, we’ll cover everything but just briefly. I’ll give you a description of what’s in each bubble with an idea of relative complexity and its requirements in terms of expertise. I have techniques grouped where appropriate based on effort and scope. You’ll see I’ve also split techniques for focus (see: constrained random test and constrained random closure). Admittedly, the descriptions sound a bit mechanical, but I did that to maintain equal representation between them and avoid unintentionally asserting my own biases.

Finally, as you read on you should see a theme emerge, that a complete methodology requires a complementary deployment of multiple techniques. At each abstraction, we have options for comprehensive, high effort techniques focused on detailed design intent and low effort techniques to trap unintended implementation choices and outcomes. Some overlap where noted, but everything has a specific purpose. Saw to cut lumber, hammer to pound nails, cordless to drive screws, etc.

—

Line/Block Abstractions

X Checking – Race Detection

Targeted, low effort techniques include X checking and dynamic race detection. X checking relies on formal exploration of a design to find undriven signals. Race detection dynamically captures write-write and read-write collisions that result in non-deterministic evaluation of a signal. X checking can happen stand alone while dynamic race detection is passively deployed in simulation. Both are low effort techniques that are easily integrated. Neithers require experienced users.

Lint – Autoformal

General, low effort techniques include lint and autoformal. They capture unintended design constructs and language usage. As such, they flag a variety of issues; lint through static code analysis and autoformal through formal property checking. Some overlap exists between the two techniques though they are complementary with autoformal being an extension of lint. Both are low effort techniques that are easily integrated. Neither requires experienced users.

Unit Tests – Property Checking – Directed Tests

Comprehensive, high effort techniques include unit testing, property checking and directed testing. All are employed toward exhaustively verifying low level design intent. Typically, unit testing is applied to individual design units prior to integration through pin/bus level interactions, directed testing is applied to subsystems using bus level interactions and property checking may be applied to either design units and/or subsystems through pin/bus level properties. The goal of unit testing is exhaustive pin/bus level functionality while property checking and directed tests focus on subsystem sanity. All require infrastructure to varying degree. Intermediate to expert level experience – and corresponding design knowledge – is required.

Dynamic Assertions

A passive, moderate effort technique is dynamic assertions. Focus again is design intent though unlike other line/block techniques, dynamic assertions are passively deployed through simulation. Dynamic assertions are complementary in that they expedite diagnosis and triage of pin/bus level simulation failures. Intermediate to expert level experience is required. Embedded assertions require white box design knowledge.

—

Feature Abstraction

Property Checking – Directed Tests

Continuing from line/block but with a few differences with respect to purpose… comprehensive, high effort techniques include property checking and directed testing. Property checking and directed tests build forward from a sanity baseline, continuing through the known feature space with input considerations for full transactions and multiple transactions. At the feature level, both require moderate to expert level experience.

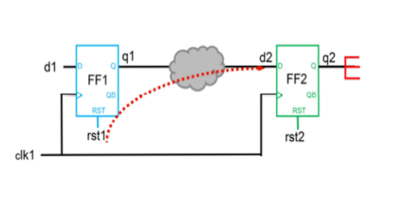

Clock Domain Checking (CDC) – Reset Domain Checking (RDC)

Targeted, low effort techniques for clock and reset verification are CDC and RDC. Both are specifically targeted toward designs with multiple clock and reset domains. Both are easily integrated but also require detailed knowledge of clock and reset circuitry.

Path Analysis – Memory Mapped Register (MMR) Checking

Targeted, moderate effort techniques are path analysis and memory mapped register checking. Path analysis verifies secure access – or no access – to critical nodes within a design. MMR checking verifies intended register access across the full memory map. Both techniques are easily integrated however they also require secure access and MMR definitions respectively.

Reachability Checking

Reachability checking is a general, low effort technique for finding dead code within a design. It requires no experience or design knowledge to use.

Sequential Logic Equivalence Checking (SLEC)

SLEC is a general, low to moderate effort technique for verifying sequential equivalence of two RTL modules. Specific flows that use equivalence checking relate to low power clock gating, fault mitigation logic and ECOs. Effort and expertise required is proportional to intended differences in implementation.

Goal-Driven Stimulus – Constrained Random Testing

Comprehensive, very high effort techniques at the feature level are goal-driven stimulus and constrained random testing. The increase in effort corresponds to the addition of infrastructure for self-checking and stimulus generation. Goal-driven stimulus comprehensively covers a specified feature space; likewise for constrained random with randomization that takes a design beyond the known feature space. The primary objectives of both are bug finding. Tests cover multiple transactions with variability across those transactions. Moderate to expert level experience is required.

—

Feature Set/System Abstraction

Connectivity Checking

Connectivity checking is a general, moderate effort technique applied primarily to SoC level or within large subsystems to formally verify connectivity within them. No specific expertise required. Does require a complete definition for point-to-point integration connections within the DUT.

Gate CDC

Gate CDC is the same targeted, low effort technique as CDC for verifying clock domain crossings but it’s applied to gate level netlists.

Gate Level Simulation (GLS)

Gate level simulation is a general, high effort technique where existing tests from simulation are re-purposed to gate-level netlists. Gate-level sims verify general functionality but more importantly the timing and synchronization sensitive aspects of a design. It requires moderate to high level expertise – specifically debug expertise.

Power Aware

Power aware verification is a targeted, moderate effort technique applicable to designs that require active power management. The focus is verifying power domain partitioning/protection and correct operation during transitions between power states including power-up and power-down. Power aware is deployed as an additional dimension to simulation or emulation platforms. It requires intermediate to expert level expertise for capturing power management architecture in UPF (or equivalent).

Systematic Closure – Constrained Random Closure

Similar description to goal-driven stimulus and constrained random test, except closure implies design quality stabilized such that effort moves beyond bug hunting and into coverage closure. Same infrastructure required with additions for functional coverage, more rigorous stimulus and longer running and/or more targeted tests. Systematic closure incorporates intelligent randomization through the addition of portable stimulus. Both techniques focus on exhaustively exercising integrated feature sets while systematic closure stretches into the system abstraction.

—

That was a lot but we’re still just getting started. Next post we transition all this stuff to a timeline. We’ll see when tools kick in, where they overlap and how they complement each other across a development cycle.

-neil

Comments