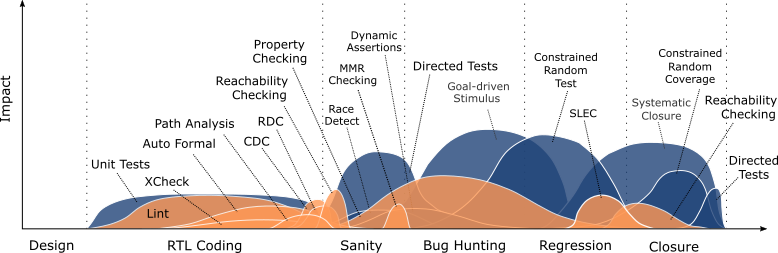

The Ideal Verification Timeline

Our discussion around building integrated verification methodologies started with where techniques apply to design by plotting options for verifying low, medium and high-level abstractions. That was one step to understanding how techniques fit together in a complete methodology. Today we take the next step with a timeline for when all these techniques apply.

When I started putting this timeline together I intended it to be generally applicable. That only lasted one conversation. Fellow product engineer Matthew Balance immediately pointed out a generally applicable timeline isn’t possible. At a minimum, he suggested, a timeline depends on the scope of the test subject; are we looking at a timeline for subsystem development or an entire SoC?

Thanks to Matthew, a subsystem timeline is what we’re looking at for now; SoC timeline is TBD. What it shows are all the techniques at our disposal, a recommendation for when each is most effective and the relative impact we should expect. I loosely define impact as value of new results (i.e. portion of design verified, number of bugs found, etc).

The curve for each technique describes how its impact grows, peaks, then tapers off as the value of new results diminish.

The pace at which impact builds for a particular technique is proportional to its infrastructure dependencies and/or the state of the design. As an example for infrastructure, constrained random test has large infrastructure dependencies so its impact grows slowly. Reachability checking, on the other hand, is deployed without infrastructure so it ramps up very quickly. With respect to design state, that may apply to the size of the code base or its quality. The impact of lint, for example, grows slowly at first with the size of the code base then flattens out to incrementally deliver new value every time lint is run. In contrast, constrained random coverage grows slowly as the quality of the design improves and more of the state space can be explored without hitting bugs.

The relative height of the peaks is subjective. After many discussions and tweaks to this graphic, I think the height and duration of each peak pretty accurately captures what we’d see if we averaged the entire industry. That said, I’m certain someone out there will curse me for suggesting the impact of property checking is less than they’ve experienced or take exception to a perceived bias toward constrained random testing. I’ve done my best to capture relative impact while acknowledging case-by-case mileage may vary tremendously.

Time on the x-axis is split into development stages. Design is the before-any-coding-happens step. It’s not relevant to this discussion yet but I expect it to become relevant when we talk about infrastructure down the road. For now, just consider it as the start line. RTL coding is where the design is built. Sanity marks the first functional baseline for the subsystem where focus is on design bring-up and pipe cleaning features with happy path stimulus. The bug hunting phase is where we start flexing the design beyond the happy path. We expect to find bugs at this point – which is why I call it bug hunting. As the design matures, we transition into the regression phase. Focus here is still on testing; new and longer tests that push the design into darker corners of the state space. Last is closure where focus turns to complete state space coverage through comprehensive stimulus and analysis.

Two sets of recommendations I intend for people to find in the timeline:

- When a technique is ideally applied in a development cycle; and

- When a technique is ideally applied relative to other techniques.

For example, I’m recommending X checking has the greatest impact after RTL coding is well underway right up until its complete. Further, I’m recommending X checking best applied before directed testing or constrained random simulation begins. This isn’t to say X checking couldn’t be useful at the very end, but its impact would be far less because most X’s would have already been discovered – much more painfully discovered I should add – by other techniques.

A couple other points on steps in the timeline that will further drive decisions on which techniques are used and when…

The most dangerous stage on our timeline is bug hunting because it’s thoroughly unpredictable. According to my favourite Wilson Research Group data from 2018, verification engineers estimated 44% of their effort on debug (i.e. bug hunting). Debug cycles are non-deterministic; some bugs take an hour to fix, others take a week. The fewer bugs you have, the less time you waste on bug hunting, the more predictable your progress. A complete verification methodology prioritizes predictability which means squeezing this part of the timeline. Higher quality inputs to the bug hunting phase is one way to do that.

The most underrated step in the timeline: RTL coding. Coming back to the same Wilson Research Group data point from 2018, verification engineers estimated only 19% of their time is spent developing testbenches. The disparity between development and debug gives the impression that we’re rushing to get testbenches written – a predictable activity – so we can quickly get to fixing them – an unpredictable activity. We don’t have the same granular breakdown for design engineers but we do have a data point from the same survey that shows design engineers spend almost half their time doing verification. Hard to draw a strong conclusion from that, but I think there’s enough anecdotal evidence kicking around to suggest much of that time is spent debugging tests; the same unpredictable activity. So assuming we’re all involved in bug hunting, I’d like to see us displace some of that effort with proactive verification during RTL (and testbench) coding. Looking at the timeline, there are eight techniques to choose from.

That’s a quick overview of the timeline with an explanation of how I’ve modeled the impact of techniques and where they’re placed in time. I’ll be back in the next few weeks to pull the timeline apart and get a better feel for what’s possible. Until then, I’d like to hear peoples’ thoughts on how techniques are positioned on the timeline, their impact and how they complement each other. If you’ve got any strong opinions, here’s your chance to let it out!

-neil

Comments

Leave a Reply

You must be logged in to post a comment.

Great post Neil. It brings the verification discussion to places where I haven’t seen it before. There is one thing of information I think would be very interesting if available. Where are bugs found vs where they could have been found if the right verification technique was used properly at an earlier phase? I think that would be an interesting question for future Wilson studies. Are the late bugs due to complex dependencies only visible when everything comes together or are they simple highly localized issues that could have been detected earlier?

thanks lars! this one did feel pretty good so I’m glad to hear I’m pushing the boundaries. for your follow-up, I haven’t quite figured out how best to model differences in when bugs are found v. could have been found but I am working on it. if/when I figure it out, you’ll see it here 🙂

-neil

Great post Neil, as always well detailed, in the past I’ve had many discussions trying to convince people to do this kind of exercise on our projects, always getting the same response we don’t have time we’ll do it on the next project…

I’d something related to early silicon debug testing, since sometimes this affects the entire timeline.